Fine-tuning - Making AI Your Personal Assistant

Updated: October 28, 2025

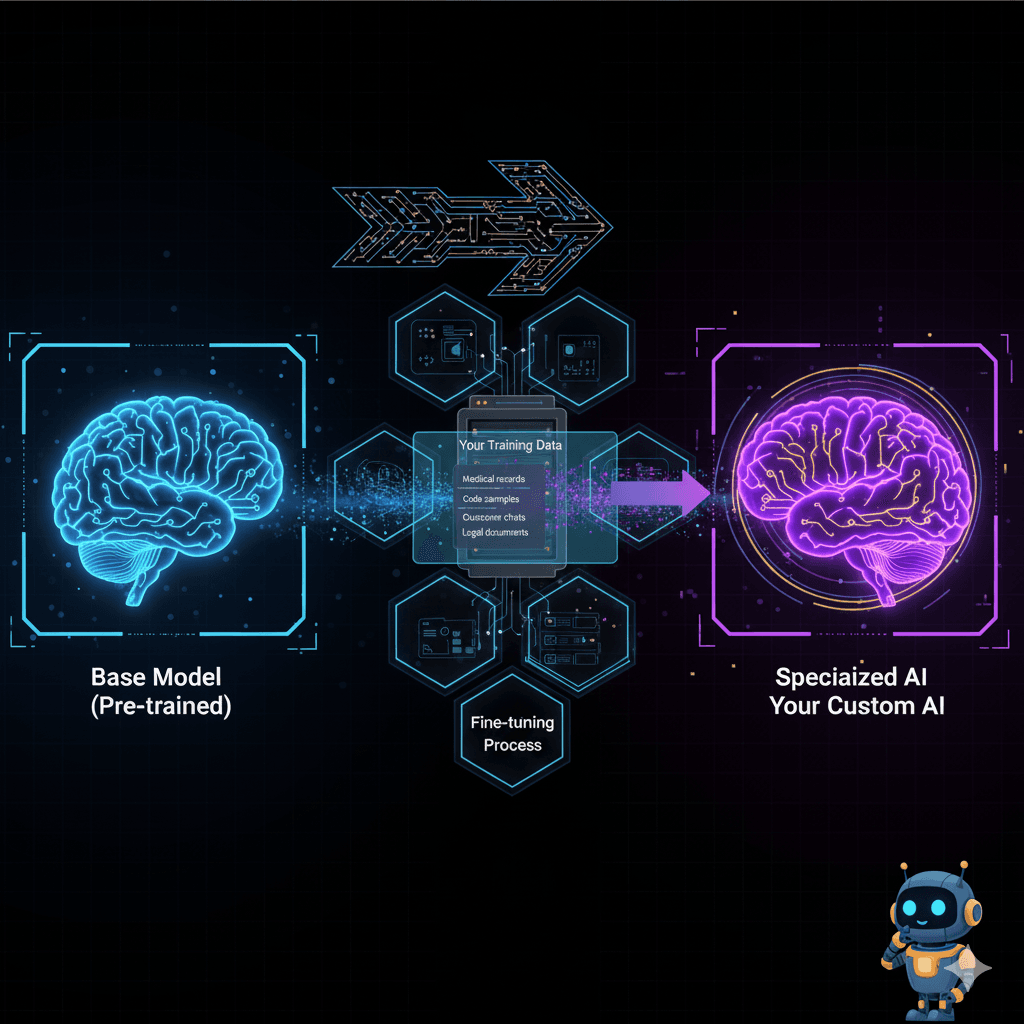

Fine-tuning is like getting a suit tailored. You start with a quality off-the-rack suit (pre-trained model), then customize it to fit you perfectly (specialized knowledge).

👔The Tailored Suit Analogy

Off-the-rack Suit (Pre-trained Model)

- •Fits most people okay

- •Generic style

- •Good quality

- •Ready to wear

Tailored Suit (Fine-tuned Model)

- ✓Fits you perfectly

- ✓Your style preferences

- ✓Same quality base

- ✓Customized details

Real Examples of Fine-tuning Success

Example 1: Medical Diagnosis Assistant

Example 2: Legal Document Analyzer

Example 3: Customer Service Bot

The Fine-tuning Process: Step by Step

Step 1: Choose Your Base Model

Like choosing a car to modify:

- • Efficient, reliable

- • Great for simple tasks

- • Easy to modify

- • Balanced performance

- • Versatile

- • Most popular choice

- • Maximum performance

- • Expensive to run

- • For specialized needs

Step 2: Prepare Your Training Data

My actual example from the PostgreSQL project:

{

"conversations": [

{

"from": "system",

"value": "You are a PostgreSQL expert."

},

{

"from": "human",

"value": "How do I handle connection pooling?"

},

{

"from": "assistant",

"value": "For PostgreSQL connection pooling, you have several options:

1. **PgBouncer** (most popular):

- Lightweight and efficient

- Three pool modes: session, transaction, statement

- Configuration example:

```

[databases]

mydb = host=localhost dbname=mydb

[pgbouncer]

pool_mode = transaction

max_client_conn = 100

```

2. **Built-in connection pooling** (PostgreSQL 14+):

- Simpler setup

- Less feature-rich than PgBouncer

3. **Application-level** (like HikariCP for Java):

- More control

- Language-specific

For most cases, PgBouncer in transaction mode offers the best balance."

}

]

}Step 3: The Actual Training

Using Google Colab (Free):

# This is real code that works!

# 1. Install necessary libraries

!pip install transformers datasets accelerate peft

# 2. Load the base model

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "meta-llama/Llama-2-7b-hf" # <Link href="/blog/best-local-ai-models-2025-complete-guide" className="text-cyan-300 hover:text-cyan-100 underline">Llama 2 model</Link> for fine-tuning

model = AutoModelForCausalLM.from_pretrained(model_name)

tokenizer = AutoTokenizer.from_pretrained(model_name)

# 3. Load your data

from datasets import load_dataset

dataset = load_dataset("json", data_files="your_training_data.json")

# 4. Configure training (LoRA for efficiency)

from peft import LoraConfig, get_peft_model

lora_config = LoraConfig(

r=16, # Rank - higher = more capacity

lora_alpha=32, # Scaling parameter

target_modules=["q_proj", "v_proj"], # What to train

lora_dropout=0.1 # Prevent overfitting

)

model = get_peft_model(model, lora_config)

# 5. Train!

from transformers import Trainer, TrainingArguments

training_args = TrainingArguments(

output_dir="./my-fine-tuned-model",

num_train_epochs=3,

per_device_train_batch_size=4,

learning_rate=2e-4,

logging_steps=10

)

trainer = Trainer(

model=model,

args=training_args,

train_dataset=dataset["train"],

tokenizer=tokenizer

)

trainer.train()

# 6. Save your specialized model

trainer.save_model("my-assistant")LoRA: The Method for Affordable Fine-tuning

LoRA (Low-Rank Adaptation) is like teaching with sticky notes:

Traditional Fine-tuning

- • Rewrite entire textbook

- • Expensive, time-consuming

- • Need lots of storage

LoRA Fine-tuning

- • Add sticky notes to textbook

- • Quick, cheap

- • Original book stays intact

- • Can remove/swap sticky notes

Real Numbers:

Full Fine-tuning of 7B model

- Memory needed: 28GB

- Storage: 13GB

- Training time: 20 hours

LoRA Fine-tuning of 7B model

- Memory needed: 8GB

- Storage: 20MB (just the sticky notes!)

- Training time: 2 hours

My Results: Before and After Fine-tuning

Before Fine-tuning (Generic LLaMA-7B)

After Fine-tuning (My PostgreSQL model)

1. Run EXPLAIN ANALYZE to see the actual execution plan

2. Look for Sequential Scans on large tables - add indexes:

CREATE INDEX CONCURRENTLY idx_name ON table(column);

3. Check statistics are current: ANALYZE table_name;

4. For complex queries, consider:

- Partial indexes for WHERE conditions

- Covering indexes for index-only scans

- Adjusting work_mem for sorts/hashes

5. Monitor with pg_stat_statements to find problem queries"

Fine-tuning for Different Purposes

Style Transfer

Domain Expertise

Company Voice

Language/Dialect

Common Fine-tuning Mistakes

Mistake 1: Too Little Data

Mistake 2: Inconsistent Format

Mistake 3: Overfitting to Specific Examples

DIY Fine-tuning Project

Build Your Own Writing Assistant

1. Pick Your Style:

- • Professional emails

- • Creative stories

- • Technical documentation

- • Social media posts

2. Collect 100 Examples:

- • Best examples of that style

- • Diverse topics

- • Consistent quality

3. Format Your Data:

4. Use Free Tools:

- • Google Colab for training

- • Hugging Face for hosting

- • Gradio for web interface

🎓 Key Takeaways

- ✓Fine-tuning is like tailoring - customize a pre-trained model for your specific needs

- ✓LoRA makes it affordable - 8GB RAM, 20MB storage, 2 hours instead of 28GB/13GB/20 hours

- ✓Real examples prove it works - 94% accuracy for medical diagnosis, 70% query resolution for customer service

- ✓Quality over quantity - 100-1000 good examples beat 10 poor ones

- ✓You can do this - weekend project, $0-10 cost, build your own AI assistant

❓Frequently Asked Questions About Fine-tuning

How much data do I need to fine-tune an AI model?

You need between 100-1000 high-quality examples for effective fine-tuning. Quality matters more than quantity - 500 well-formatted, diverse examples will perform better than 5000 inconsistent ones. The key is having examples that represent the specific style or knowledge you want to teach the model.

What's the difference between fine-tuning and training from scratch?

Fine-tuning adapts an existing pre-trained model (like customizing a suit), while training from scratch creates a completely new model (like weaving fabric from raw materials). Fine-tuning costs $0-100 and takes hours, while training from scratch costs millions and takes months. Fine-tuning is practical for most applications.

Can I fine-tune models on my own computer?

Yes! With LoRA (Low-Rank Adaptation), you can fine-tune 7B models on a standard laptop with 8GB RAM. Google Colab provides free GPU access for larger models. The key is using efficient techniques like LoRA instead of full fine-tuning, which would require expensive hardware.

How do I prevent my model from overfitting during fine-tuning?

Prevent overfitting by: (1) Using a separate validation set to monitor performance, (2) Implementing early stopping when validation performance degrades, (3) Using dropout layers in your LoRA configuration, (4) Limiting training epochs to 2-5 instead of training too long, and (5) Ensuring your training data is diverse and representative.

What are the most common use cases for fine-tuned models?

Popular use cases include: (1) Customer service bots trained on company data, (2) Medical diagnosis assistants trained on clinical reports, (3) Legal document analyzers trained on contracts, (4) Content creators with specific brand voices, (5) Translation models for specialized domains, and (6) Educational tutors for specific subjects.

🔗 Research & Further Reading

Explore these authoritative resources to deepen your understanding of AI fine-tuning:

LoRA: Low-Rank Adaptation of Large Language Models

Original research paper from Microsoft introducing LoRA

arxiv.orgHugging Face Fine-tuning Documentation

Comprehensive guides and examples for model fine-tuning

huggingface.coPyTorch Fine-tuning Tutorial

Step-by-step tutorial for computer vision models

pytorch.orgGoogle Colab Platform

Free GPU access for training your models

colab.research.google.comGoogle ML Crash Course

Fundamentals of machine learning concepts

developers.google.comTensorFlow Transfer Learning Guide

Official TensorFlow documentation on fine-tuning

tensorflow.orgInstruction Following Research

OpenAI's research on training models to follow instructions

openai.comDeepLearning.ai Courses

Andrew Ng's comprehensive machine learning courses

deeplearning.aiPapers with Code - Fine-tuning

Latest research papers and implementations

paperswithcode.com📚 Educational Resources & Compliance

Learning Objectives

- • Understand fine-tuning fundamentals and when to use it

- • Master LoRA technique for efficient model customization

- • Create personal AI assistants with real-world data

- • Avoid common fine-tuning mistakes and pitfalls

- • Apply fine-tuning to practical use cases

Chapter Information

Citation & References

This chapter references established research and practices in AI fine-tuning. Key sources include Microsoft's LoRA paper, Hugging Face documentation, and practical case studies from real-world implementations.

Was this helpful?

Related Guides

Continue your local AI journey with these comprehensive guides

Ready to Explore AI in Action?

In Chapter 10, discover the trade-offs between local and cloud AI - privacy, cost, power, and control!

Continue to Chapter 10