Part 4: AI in Action · Chapter 10 of 12

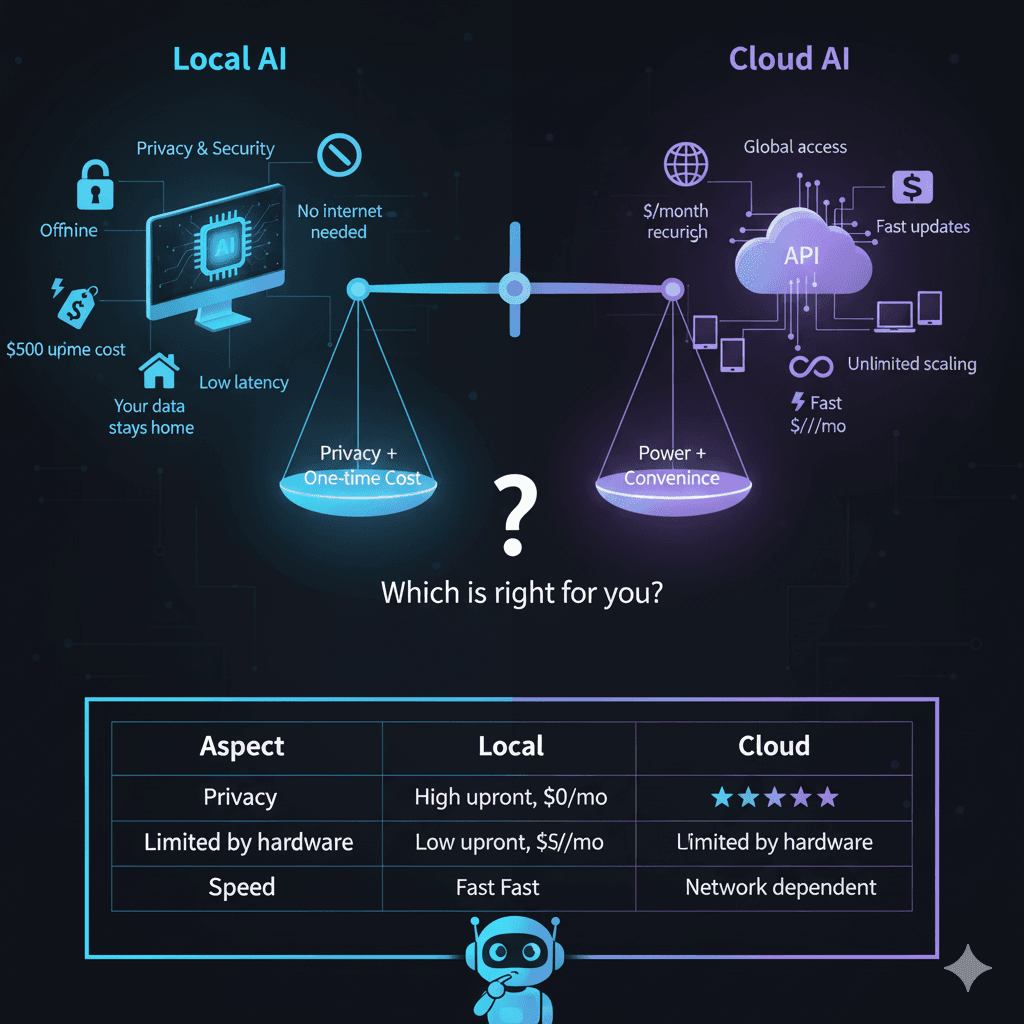

Local vs Cloud AI - Privacy vs Power


Should you use ChatGPT in the cloud, or run AI on your own computer? It's like choosing between eating at a restaurant or cooking at home. Both work, but the trade-offs are fascinating.

🍽️ The Restaurant vs Home Cooking Analogy

☁️ Cloud AI (Restaurant)

- Professional chefs: Powerful models
- No cooking/cleanup: No setup needed
- Costs per meal: Subscription/usage fees
- They see what you eat: Privacy concerns
- Need to go there: Internet required

💻 Local AI (Home Cooking)

- You're the chef: Complete control
- Buy groceries once: One-time setup
- Cook anytime: No subscription or per-use fees
- Complete privacy: Nobody else sees your prompts
- Always available: Works offline

Real Cost Comparison

Cloud AI (Subscription)

- Monthly cost: $20-25
- Annual cost: $240-300
- Usage limits: Yes
- Privacy: Data shared
- Internet: Required
- Model updates: Automatic
- Available models: 1-2 top tier

Local AI (One-time)

- Initial hardware: $0-2000
- Annual cost (after setup): $0-60 (electricity only)
- Usage limits: None
- Privacy: Complete
- Internet: Not required
- Model updates: You control
- Available models: Hundreds
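To make the trade-off concrete, you can work out the break-even point from the figures above. The sketch below is a rough illustration, not a pricing model: the function name `break_even_months` is made up for this example, and the $400 hardware figure is an arbitrary pick from inside the $0-2000 range.

```python
def break_even_months(hardware_cost: float,
                      cloud_monthly: float,
                      local_annual_running: float) -> float:
    """Months until a one-time hardware purchase is paid back by saved subscription fees."""
    local_monthly = local_annual_running / 12          # e.g. electricity, spread per month
    monthly_saving = cloud_monthly - local_monthly
    if monthly_saving <= 0:
        # Local running costs match or exceed the subscription: never pays back.
        return float("inf")
    return hardware_cost / monthly_saving


# Assumed inputs: $400 of hardware, a $20/month subscription, $60/year of electricity.
months = break_even_months(hardware_cost=400, cloud_monthly=20, local_annual_running=60)
print(f"Break-even after about {months:.1f} months")
```

With these assumed inputs the hardware pays for itself in a little over two years. If you already own a capable computer (the $0 case), local AI is cheaper from day one.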

Privacy: The Real Difference

What Cloud AI Sees:

- ✓ Your prompts
- ✓ Your documents
- ✓ Your code
- ✓ Your ideas
- ✓ Timestamps
- ✓ Usage patterns
- ✓ IP address

What Local AI Sees:

🔒 Nothing leaves your computer

- No company access
- No data collection
- No usage tracking
- Complete isolation
- Your data stays private

Real Scenarios:

Company Trade Secrets:

- Cloud AI: Risk of exposure
- Local AI: Data never leaves your machine

Personal Medical Info:

- Cloud AI: HIPAA concerns
- Local AI: Complete privacy

Financial Data:

- Cloud AI: Potential vulnerability
- Local AI: Stays isolated on your own hardware

Performance Comparison: Real Tests

I tested cloud and local models on identical tasks:

Task 1: Write a blog post about coffee

ChatGPT (GPT-4)

- Time: 3 seconds
- Quality: 9.5/10
- Cost: Part of $20/mo

Local LLaMA-70B

- Time: 45 seconds
- Quality: 8.5/10
- Cost: $0

Local Mistral-7B

- Time: 2 seconds
- Quality: 7/10
- Cost: $0

Task 2: Debug Python code

Claude Pro

- Time: 2 seconds
- Quality: 9.5/10
- Result: Found all bugs

CodeLlama-34B

- Time: 20 seconds
- Quality: 9/10
- Result: Found all bugs

CodeLlama-7B

- Time: 1.5 seconds
- Quality: 7.5/10
- Result: Found most bugs

Hardware Requirements: What You Actually Need

Minimum (7B models)

- GPU: GTX 1660 or better (6GB VRAM)
- RAM: 16GB
- Storage: 50GB free
- Cost: ~$200 used
- Can run: Mistral-7B, LLaMA-7B
- Speed: 10-30 tokens/second

Recommended (13B models)

- GPU: RTX 3060 12GB or better
- RAM: 32GB
- Storage: 100GB free
- Cost: ~$400
- Can run: LLaMA-13B, CodeLlama-13B
- Speed: 15-40 tokens/second

Enthusiast (30B models)

- GPU: RTX 3090 24GB or better
- RAM: 64GB
- Storage: 200GB free
- Cost: ~$1000
- Can run: LLaMA-30B, Falcon-40B
- Speed: 5-20 tokens/second

Pro (70B models)

- GPU: 2x RTX 3090, or a single RTX 4090 with part of the model offloaded to system RAM
- RAM: 128GB
- Storage: 500GB free
- Cost: ~$3000
- Can run: LLaMA-70B and most smaller models
- Speed: 3-10 tokens/second
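A rough way to sanity-check these tiers is to estimate how much memory a model's weights need. The sketch below assumes 4-bit quantized weights and adds a flat 20% for runtime overhead. Both numbers are illustrative assumptions rather than figures from this chapter, and real usage depends on the quantization format and context length. The function names are hypothetical.

```python
def estimate_vram_gb(params_billions: float,
                     bits_per_weight: int = 4,
                     overhead: float = 0.2) -> float:
    """Rule-of-thumb memory estimate for running a model, in GB.

    bits_per_weight=4 and overhead=0.2 are assumptions for illustration.
    """
    # 1 billion parameters at 8 bits each is about 1 GB of weights.
    weights_gb = params_billions * bits_per_weight / 8
    return weights_gb * (1 + overhead)


def fits_in_vram(params_billions: float, vram_gb: float) -> bool:
    """True if the estimated footprint fits in the given amount of VRAM."""
    return estimate_vram_gb(params_billions) <= vram_gb


for size in (7, 13, 30, 70):
    print(f"{size}B model: roughly {estimate_vram_gb(size):.1f} GB at 4-bit")
```

Under these assumptions a 7B model needs roughly 4 GB, which fits a 6GB card, while a 70B model needs roughly 42 GB, more than any single 24GB card can hold without spilling into system RAM.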

Setting Up Local AI: The 10-Minute Guide

🎯 Option 1: LM Studio (Easiest)

Like Spotify for AI

1. Download LM Studio (free)
2. Click "Browse" to see available models
3. Download model (one click)
4. Click "Load"
5. Start chatting!

Time: 10 minutes

Difficulty: Instagram-level easy

⚡ Option 2: Ollama (Command Line)

Like Netflix for AI

1. Install Ollama
2. Run: `ollama pull llama2`
3. Run: `ollama run llama2`
4. Start chatting!

Time: 5 minutes

Difficulty: Basic terminal knowledge
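Once Ollama is running, it also serves a local HTTP API (by default on port 11434), so you can script it as well as chat with it. The sketch below uses only Python's standard library. The helper names `build_request` and `ask_local` are made up for this example, and it assumes the `llama2` model from step 2 has already been pulled.

```python
import json
import urllib.request

# Ollama's default local endpoint; change it if you configured a different host or port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama2") -> urllib.request.Request:
    """Build a POST request asking the local Ollama server for one complete reply."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local(prompt: str, model: str = "llama2", timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


# Usage (requires Ollama to be running on this machine):
#   print(ask_local("Write a haiku about pizza"))
```

The request only ever goes to `localhost`, so the prompt stays on your machine.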

🔧 Option 3: Text Generation WebUI (Advanced)

Like Adobe for AI

1. Clone from GitHub
2. Run installation script
3. Download models
4. Configure settings
5. Launch web interface

Time: 30 minutes

Difficulty: Moderate technical knowledge

The Hybrid Approach: Best of Both Worlds

My Personal Setup:

Daily Tasks (Local AI)

- Email writing: Local Mistral-7B (free, fast)
- Code completion: Local CodeLlama-13B (private)
- Quick questions: Local Phi-2 (instant)

Complex Tasks (Cloud AI)

- Research papers: Claude Pro (best quality)
- Complex analysis: ChatGPT (GPT-4) (cutting edge)
- Image generation: Midjourney (specialized)

Results

- Monthly cost: $20 (cloud services only)
- Privacy: Maintained (sensitive work stays local)
- Best of both: Achieved (maximum flexibility)

Real Use Cases: When to Use What

Use Local AI When:

- ✓ Working with confidential data
- ✓ No internet connection
- ✓ Repetitive tasks (no usage limits)
- ✓ Need consistent responses (the model only changes when you change it)
- ✓ Want to customize/fine-tune
- ✓ Budget conscious
- ✓ Privacy is critical

Use Cloud AI When:

- ✓ Need absolute best quality
- ✓ Want latest features immediately
- ✓ Don't have good hardware
- ✓ Occasional use only
- ✓ Need support/reliability
- ✓ Working on non-sensitive data
- ✓ Collaboration with team
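The two checklists above boil down to a simple routing rule. The sketch below is one possible way to encode it, not a definitive policy: the `Task` fields and the `choose_backend` function are hypothetical names, and treating privacy and connectivity as hard constraints is an assumption about priorities.

```python
from dataclasses import dataclass


@dataclass
class Task:
    description: str
    confidential: bool = False       # involves private or sensitive data
    offline: bool = False            # no internet connection available
    needs_top_quality: bool = False  # quality matters more than cost or speed


def choose_backend(task: Task) -> str:
    """Return "local" or "cloud" for a task, following the checklists above."""
    # Hard constraints first: confidential data and offline work must stay local.
    if task.confidential or task.offline:
        return "local"
    # Otherwise send only the demanding work to the cloud.
    if task.needs_top_quality:
        return "cloud"
    # Everyday tasks default to local: no per-use fees and no usage limits.
    return "local"


print(choose_backend(Task("Draft a reply to a customer", confidential=True)))
print(choose_backend(Task("Analyze a public research paper", needs_top_quality=True)))
```

Note that a task that is both confidential and quality-critical still goes to the local model here. That ordering is a deliberate choice in this sketch, and you may weigh it differently.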

🎯 Try This: Your First Local Model

15-Minute Setup Challenge:

1. Download LM Studio (3 min)

- Go to lmstudio.ai
- Download for your OS
- Install like any app

2. Get a Model (10 min)

- Open LM Studio
- Go to "Explore"
- Search "Mistral 7B"
- Click download (about 4GB)

3. Test It (2 min)

- Click "Load Model"
- Type: "Write a haiku about pizza"
- Compare the result with ChatGPT's answer to the same prompt

Congratulations! You're now running AI locally!


Key Takeaways

- ✓ Local AI offers complete privacy: nothing leaves your computer
- ✓ Cloud AI offers convenience: no setup, latest models, professional support
- ✓ Cost comparison: local has an upfront cost, cloud has an ongoing subscription
- ✓ Performance varies: cloud models gave the best quality in my tests, small local models were just as fast, and local is more flexible
- ✓ Hybrid approach is best: use local for daily tasks, cloud for complex work
- ✓ Setup is easier than you think: 10-15 minutes to get started


Ready to Discover Hidden AI in Your Life?

In Chapter 11, discover 50+ AI interactions you use every day without realizing it!
