AI Ethics & Bias - The Uncomfortable Truth

The Shopping Mall Security Camera Story

Imagine a security camera in a mall that's been trained to identify "suspicious behavior." After a year, data shows it flagged:

- 70% teenagers wearing hoodies (mostly Black and Hispanic)
- 5% adults in business suits carrying briefcases
- 15% people with disabilities moving "unusually"
- 10% elderly people resting on benches

The AI wasn't programmed to be racist or ableist. But the training data came from security guards who had their own biases. The AI learned and amplified these biases.

⚠️ What is AI Bias Really?

AI bias = when an AI system's decisions systematically and unfairly favor or discriminate against certain groups

💥 Real-World AI Bias Disasters

1. Amazon's Hiring AI (2018)

What happened: The AI systematically downgraded resumes from women

Why: It was trained on about 10 years of resumes submitted to the company, most of them from men

Result: It penalized resumes containing the word "women's" (as in "women's chess club")

Outcome: Amazon scrapped the entire system

2. Healthcare AI Bias

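What happened: A widely used algorithm gave white patients priority for extra care over equally sick Black patients

Why: It used healthcare spending as a proxy for health needs, and less had historically been spent on Black patients with the same level of need

Result: Black patients needed to be much sicker to get the same care

Impact: Algorithms of this kind are applied to an estimated 200 million people in the US each year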
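To see why a spending proxy can misrank patients, here is a minimal sketch. The patient records are invented for illustration, and the helper name `top_k` is hypothetical. It ranks the same four patients twice: once by spending (the proxy) and once by a direct measure of illness.

```python
# Each record: (patient_id, chronic_conditions, annual_spending_usd).
# All values are invented toy data, not figures from any study.
patients = [
    ("p1", 5, 3000),
    ("p2", 2, 9000),
    ("p3", 4, 4000),
    ("p4", 1, 7000),
]

def top_k(records, key_index, k=2):
    """Return the ids of the k records with the highest value at key_index."""
    ranked = sorted(records, key=lambda record: record[key_index], reverse=True)
    return [record[0] for record in ranked[:k]]

by_spending = top_k(patients, key_index=2)  # rank by the proxy
by_need = top_k(patients, key_index=1)      # rank by illness burden

print("Prioritized by spending:", by_spending)
print("Prioritized by need:    ", by_need)
```

In this toy data the two rankings do not share a single patient. If spending is lower for one group at the same level of illness, a model trained to predict spending will push that group down the list.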

3. Face Recognition Failures

Error rates by demographic:

- White men: 1% error
- White women: 7% error
- Black men: 12% error
- Black women: 35% error

Real impact: Innocent people arrested due to false matches

4. Criminal Justice AI

COMPAS system (predicting reoffense):

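- Black defendants who did not reoffend were labeled high-risk nearly twice as often as white defendants who did not reoffend
- White defendants who did reoffend were labeled low-risk nearly twice as often as Black defendants who did reoffend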

Used in courts across America for sentencing decisions
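Both COMPAS findings are statements about error rates within each group: the false positive rate (people who did not reoffend but were labeled high-risk) and the false negative rate (people who did reoffend but were labeled low-risk). The sketch below shows one way to compute them. The records are invented toy data, and `error_rates_by_group` is a hypothetical helper, not part of any real COMPAS audit.

```python
from collections import defaultdict

def error_rates_by_group(records):
    """Return {group: (false_positive_rate, false_negative_rate)}.

    Each record is (group, predicted_high_risk, reoffended), with booleans.
    """
    counts = defaultdict(lambda: {"fp": 0, "tn": 0, "fn": 0, "tp": 0})
    for group, predicted, actual in records:
        if actual:
            key = "tp" if predicted else "fn"
        else:
            key = "fp" if predicted else "tn"
        counts[group][key] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]  # people who did not reoffend
        positives = c["fn"] + c["tp"]  # people who did reoffend
        fpr = c["fp"] / negatives if negatives else float("nan")
        fnr = c["fn"] / positives if positives else float("nan")
        rates[group] = (fpr, fnr)
    return rates

# Toy data, invented for illustration only.
toy_records = (
    [("A", True, False)] * 4 + [("A", False, False)] * 6
    + [("A", False, True)] * 3 + [("A", True, True)] * 7
    + [("B", True, False)] * 2 + [("B", False, False)] * 8
    + [("B", False, True)] * 5 + [("B", True, True)] * 5
)

rates = error_rates_by_group(toy_records)
for group, (fpr, fnr) in sorted(rates.items()):
    print(f"group {group}: FPR={fpr:.2f}  FNR={fnr:.2f}")
```

In the toy data, group A is wrongly flagged twice as often as group B, while group B is wrongly cleared more often. A single overall accuracy number would hide that pattern.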

🔍 Where Bias Comes From

1. Historical Bias in Data

Past: "Only men are engineers" (1970s data)

AI learns: "Engineers = Men"

Future: AI rejects women applying for engineering jobs

2. Representation Bias

Training data: 80% white faces, 20% others

AI performance: Great for white faces, terrible for others

Real world: Misidentifies non-white people constantly
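A representation check can be run before any training. The sketch below compares group shares in a training set with reference shares for the population the system will serve. The 80/20 split mirrors the example above, while the reference shares, the tolerance, and the function name `representation_gaps` are assumptions made for illustration.

```python
from collections import Counter

def representation_gaps(train_groups, reference_shares, tolerance=0.05):
    """Return {group: (train_share, reference_share)} for every group whose
    share of the training data falls short of its reference share by more
    than `tolerance`."""
    counts = Counter(train_groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("training data is empty")
    gaps = {}
    for group, reference in reference_shares.items():
        share = counts.get(group, 0) / total
        if reference - share > tolerance:
            gaps[group] = (share, reference)
    return gaps

# 80% / 20% split as in the example; the reference shares are hypothetical.
train_groups = ["white"] * 80 + ["other"] * 20
reference_shares = {"white": 0.6, "other": 0.4}

gaps = representation_gaps(train_groups, reference_shares)
for group, (share, reference) in gaps.items():
    print(f"{group}: {share:.0%} of training data vs {reference:.0%} expected")
```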

3. Measurement Bias

Measuring: "Good employee" by hours in office

Misses: Remote productivity, quality over quantity

Discriminates against: Parents, caregivers, disabled workers

4. Aggregation Bias

Problem: One-size-fits-all model

Example: Medical AI trained on adults fails for children

Reality: Different groups need different approaches

📈 The Bias Multiplication Effect

A biased human decision → affects dozens

A biased AI system → affects millions

Speed of bias spread:

- Human: months to years
- AI: milliseconds

🔎 How to Identify Bias (The Detective Work)

The Fairness Test Questions:

1. Who's affected? List all groups that will interact with your AI
2. Who's missing? Check who's NOT in your training data
3. Who benefits? See who gets positive outcomes most
4. Who's harmed? Find who gets negative outcomes most
5. Who decided? Look at who built the system
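Questions 3 and 4 can be turned into numbers by comparing how often each group receives the positive outcome. The sketch below uses invented decisions and hypothetical helper names. It computes a selection rate per group and the ratio of the lowest rate to the highest. A common rule of thumb in US employment contexts, the four-fifths rule, treats a ratio below 0.8 as a signal worth investigating, not as proof of discrimination.

```python
def selection_rates(outcomes):
    """outcomes: iterable of (group, got_positive_outcome) pairs.
    Returns {group: share of that group receiving the positive outcome}."""
    totals, positives = {}, {}
    for group, positive in outcomes:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(bool(positive))
    return {group: positives[group] / totals[group] for group in totals}

def impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else float("nan")

# Toy decisions, invented for illustration only.
toy_outcomes = (
    [("group_x", True)] * 50 + [("group_x", False)] * 50
    + [("group_y", True)] * 30 + [("group_y", False)] * 70
)

rates = selection_rates(toy_outcomes)
ratio = impact_ratio(rates)
print(rates)
print(f"impact ratio: {ratio:.2f}")
if ratio < 0.8:
    print("Below the four-fifths threshold: investigate further")
```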

Red Flags to Watch For:

✅ Real Solutions That Work

1. Diverse Data Collection

2. Bias Audits (Regular Health Checks)

- Before launch: Test on all demographics
- Monthly: Check for drift
- Quarterly: Full bias audit
- Annually: Complete review with external auditors
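The monthly drift check could be as simple as comparing each group's error rate with the rate measured at launch. The following is a minimal sketch under that assumption. The group names, rates, and 0.05 threshold are invented, and `drift_report` is a hypothetical helper.

```python
def drift_report(baseline, current, max_change=0.05):
    """Compare per-group error rates from two audits.

    baseline, current: {group: error_rate}.
    Returns {group: message} for groups that are missing from the current
    audit or whose error rate moved by more than `max_change`.
    """
    flagged = {}
    for group, base_rate in baseline.items():
        if group not in current:
            flagged[group] = "missing from current data"
            continue
        change = current[group] - base_rate
        if abs(change) > max_change:
            flagged[group] = f"error rate changed by {change:+.2f}"
    return flagged

# Invented numbers for illustration only.
launch_rates = {"group_a": 0.10, "group_b": 0.12}
this_month = {"group_a": 0.11, "group_b": 0.21}

report = drift_report(launch_rates, this_month)
print(report)
```

Checking per group matters here: an average over everyone could stay flat while one group's error rate climbs.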

3. Diverse Teams

Study shows:

4. Algorithmic Corrections

- Pre-processing: Clean biased data before training
- In-processing: Add fairness constraints during training
- Post-processing: Adjust outputs to be fair
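As one illustration of pre-processing, the sketch below uses a technique often called reweighing. This is an example choice, not a method prescribed by this chapter. Each training example gets a weight so that, after weighting, group membership and outcome look statistically independent. The hiring data is invented and the function names are hypothetical.

```python
from collections import Counter

def reweighing_weights(samples):
    """samples: list of (group, label) tuples.
    Returns {(group, label): weight} with
    weight = P(group) * P(label) / P(group, label)."""
    n = len(samples)
    group_counts = Counter(group for group, _ in samples)
    label_counts = Counter(label for _, label in samples)
    pair_counts = Counter(samples)
    return {
        (group, label): (group_counts[group] * label_counts[label]) / (n * count)
        for (group, label), count in pair_counts.items()
    }

def weighted_positive_rate(samples, weights, group):
    """Share of positive labels (label == 1) in one group after weighting."""
    positive = sum(weights[(g, y)] for g, y in samples if g == group and y == 1)
    total = sum(weights[(g, y)] for g, y in samples if g == group)
    return positive / total

# Toy hiring history, invented: label 1 = hired, 0 = not hired.
history = (
    [("men", 1)] * 40 + [("men", 0)] * 10
    + [("women", 1)] * 10 + [("women", 0)] * 40
)

weights = reweighing_weights(history)
print(weights)
print(weighted_positive_rate(history, weights, "men"))
print(weighted_positive_rate(history, weights, "women"))
```

In the raw toy data, 80% of men and 20% of women were hired. After weighting, both groups show the same hire rate, so a model trained with these sample weights has less reason to treat group membership as a predictor. This does not remove proxies for group membership hidden in other features.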

🔮 The Glass Box Approach

Instead of "black box" AI:

User asks: "Why was I rejected for the loan?"

Black box AI: "Algorithm says no"

Glass box AI: "Your application was declined because:

- Credit score: 650 (minimum 680)
- Debt-to-income: 45% (maximum 40%)
- Employment history: 8 months (minimum 12)

You can improve by: [specific steps]"
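The glass box response above can be sketched as a decision function that returns its reasons along with the verdict. This is a simplified rule-based illustration using the thresholds from the example. Real lending models are usually more complex and need dedicated explanation methods, and the function and constant names here are hypothetical.

```python
# Thresholds taken from the example above.
MIN_CREDIT_SCORE = 680
MAX_DEBT_TO_INCOME = 0.40      # 40%, expressed as a fraction
MIN_EMPLOYMENT_MONTHS = 12

def explain_decision(credit_score, debt_to_income, employment_months):
    """Return (approved, reasons). `reasons` lists every failed criterion
    with the applicant's value and the required threshold."""
    reasons = []
    if credit_score < MIN_CREDIT_SCORE:
        reasons.append(
            f"Credit score: {credit_score} (minimum {MIN_CREDIT_SCORE})")
    if debt_to_income > MAX_DEBT_TO_INCOME:
        reasons.append(
            f"Debt-to-income: {debt_to_income:.0%} "
            f"(maximum {MAX_DEBT_TO_INCOME:.0%})")
    if employment_months < MIN_EMPLOYMENT_MONTHS:
        reasons.append(
            f"Employment history: {employment_months} months "
            f"(minimum {MIN_EMPLOYMENT_MONTHS})")
    return (not reasons), reasons

approved, reasons = explain_decision(
    credit_score=650, debt_to_income=0.45, employment_months=8)

if not approved:
    print("Your application was declined because:")
    for reason in reasons:
        print(f"- {reason}")
```

Returning the reasons from the same code path that makes the decision keeps the explanation from drifting away from the actual logic.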

Try This: Spot the Bias

Look at this dataset for "ideal employee" AI:

1. Who will this AI discriminate against?

Women, older workers, parents, remote workers, people with work-life balance

2. What biases will it learn?

"Good employee = young male without kids who overworks in an office"

3. How would you fix it?

Balance dataset, redefine success metrics, include diverse work styles, measure output not hours, represent remote workers

🎯 What You Can Do Today

As a User:

1. Question AI decisions affecting you
2. Report suspected bias
3. Demand transparency
4. Support diverse AI teams

As a Developer:

1. Use bias detection tools
2. Include diverse voices
3. Document limitations
4. Build appeals into systems

As a Business:

1. Audit your AI systems
2. Hire diverse teams
3. Be transparent with users
4. Take responsibility for outcomes

As a Citizen:

1. Support AI regulation
2. Educate others
3. Vote for responsible AI policies
4. Join AI ethics organizations

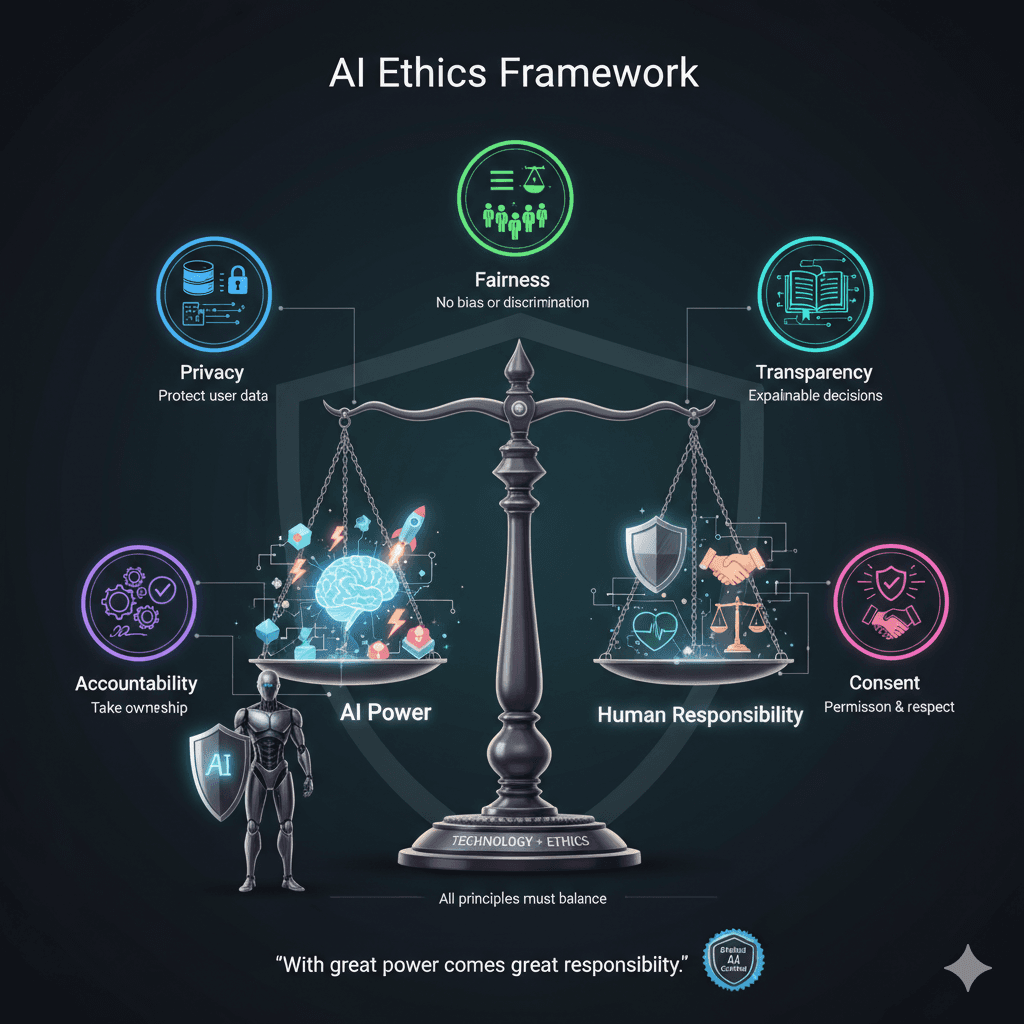

Key Takeaways

- ✓ AI bias is usually not intentional, but it is real and harmful - it is learned from training data and built in through design choices
- ✓ Bias can enter at the data, design, and deployment stages - every step matters
- ✓ Perfect fairness is impossible, but improvement is essential - we must make tradeoffs transparent
- ✓ Diversity in teams and data is crucial - diverse perspectives catch more bias
- ✓ Transparency and accountability are non-negotiable - users deserve explanations
- ✓ Everyone has a role in creating fair AI - from users to developers to citizens

"The question is not whether AI will be biased, but whose bias it will reflect and whether we can make it fair enough to benefit everyone."

Chapter Information

Academic Details

- Learning Objectives: Understand AI bias types, detection methods, and mitigation strategies

- Difficulty Level: Intermediate

- Prerequisites: Basic understanding of AI concepts

- Time Investment: 45 minutes reading + 30 minutes activities

Sources & Citations

- Primary Sources: MIT Media Lab, Science Magazine, Nature

- Case Studies: Amazon Hiring AI, Healthcare Bias, Criminal Justice

- Industry Guidelines: UK Government, White House AI Bill of Rights

- Last Updated: October 2024

External Resources & Further Reading

Academic Research

- "Gender Shades" - Joy Buolamwini, MIT Media Lab: Seminal work on facial recognition bias
- "Dissecting racial bias in an algorithm" - Science Magazine: Healthcare bias research study
- "Universal representation learning" - Nature: Advances in fair AI representation

Industry Guidelines

- UK Government AI Ethics Guidelines: Official guidance on ethical AI deployment
- White House AI Bill of Rights: US framework for AI rights and protections
- Algorithmic Justice League: Organization fighting algorithmic bias

Coming Up Next: Environmental Impact

Discover the hidden environmental cost of AI - from carbon footprints to water consumption. Learn what every AI query really costs our planet and what we can do about it.
