Speaking AI's Language - How Computers Read Text

Updated: October 28, 2025

Computers only understand numbers. So how do they read "Hello, world"?

It's like translating English to Morse code, but more complex. Let me show you exactly how AI converts your words into numbers it can understand.

🧠 Linguistic Foundation: Tokenization is fundamental tonatural language processingand was pioneered by researchers atGoogle andOpenAI. The Byte Pair Encoding (BPE) algorithm we'll explore is documented inNeural Machine Translation of Rare Words with Subword Units(Sennrich et al., 2015).

🔗 Building on Previous Chapters: Now that you understandAI basics,machine learning,Transformer architecture, andAI model sizes, we're ready to explore how AI actually processes text.

🔢The Translation Problem

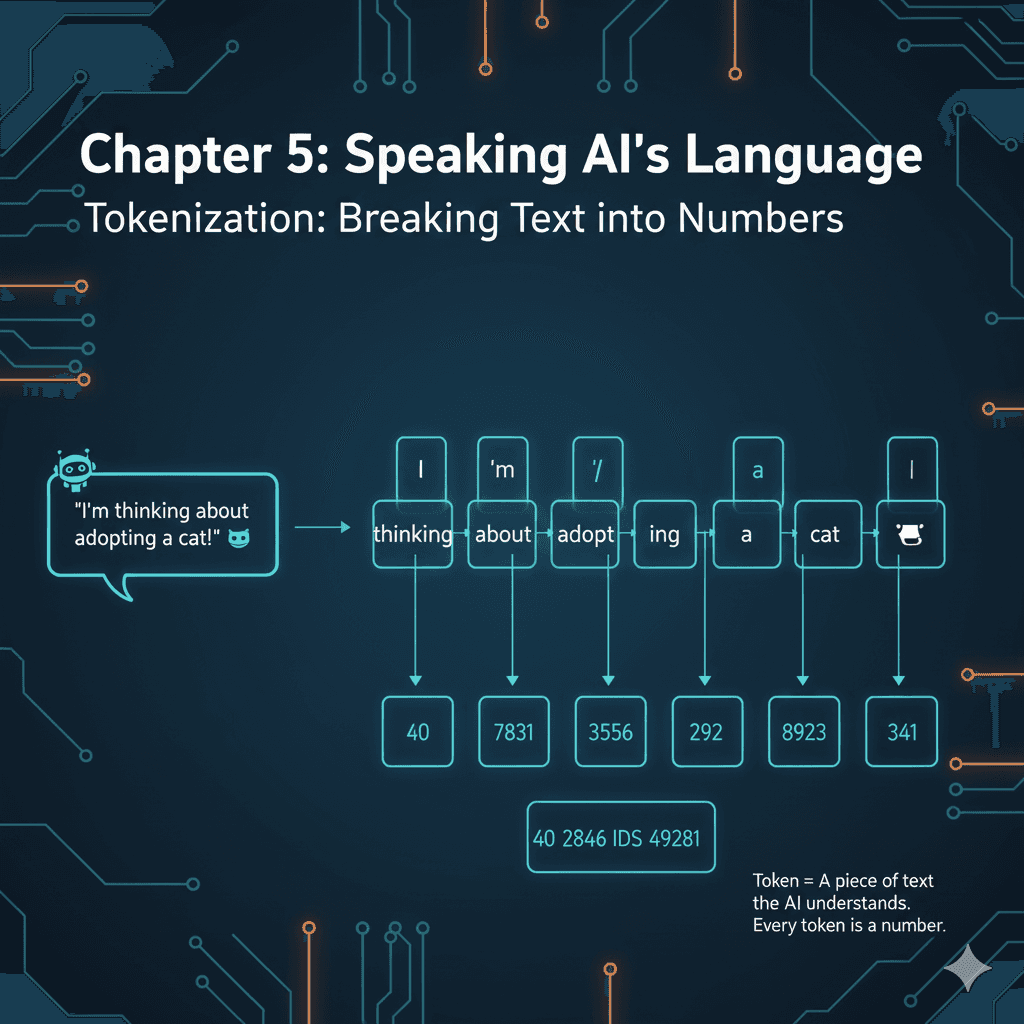

Every word, every letter, every space gets converted to numbers. This process is called tokenization.

Tokenization: Breaking Words into Pieces

AI doesn't always see whole words. It breaks text into "tokens" - like syllables:

Why Not Just Use Letters?

You might wonder: why not just use A=1, B=2, etc.?

The Problem with Letters

The Token Solution

The Dictionary: AI's Vocabulary

Every AI model has a vocabulary - typically 30,000 to 50,000 tokens:

Token Dictionary Example

Real Example: How ChatGPT Sees Your Message

You type:

ChatGPT sees:

The Power of Conlabel: Word Embeddings

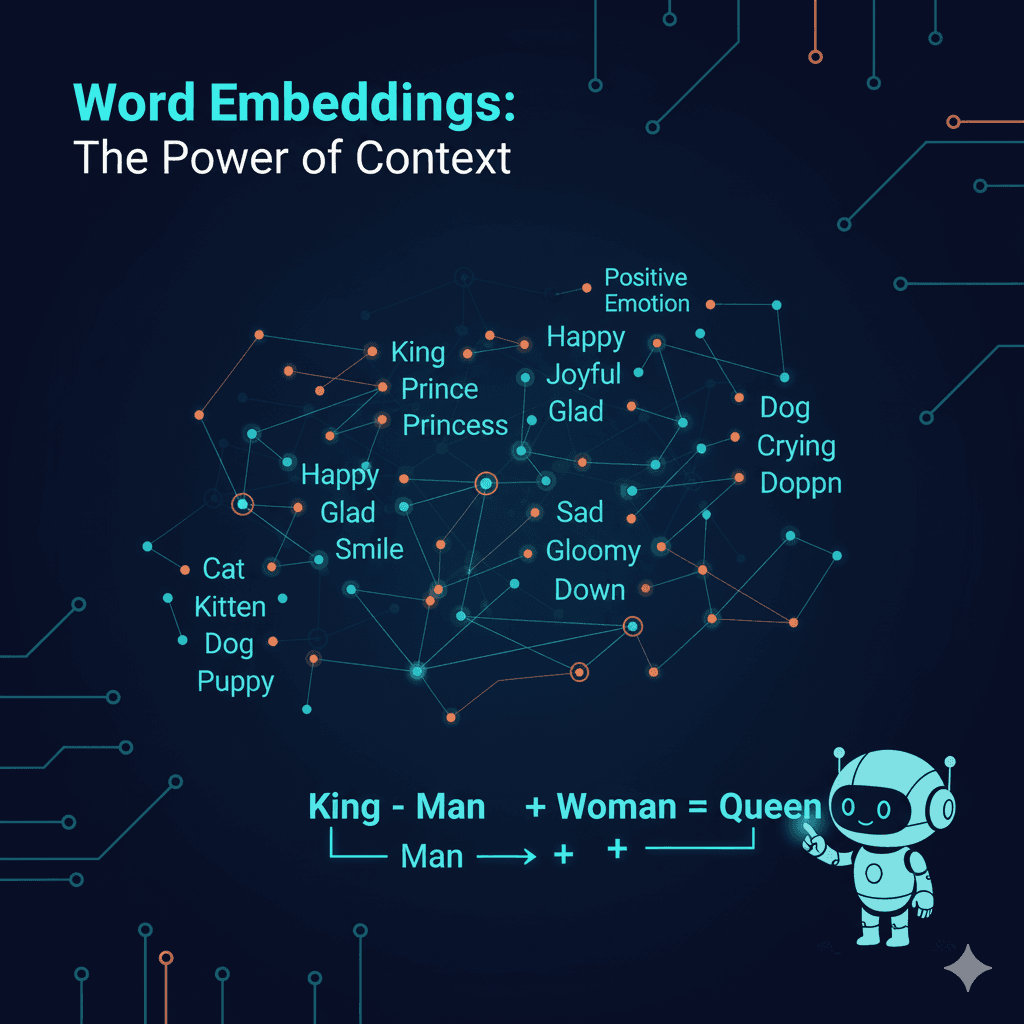

Here's where it gets interesting. Each token isn't just a number - it's a location in "meaning space":

🗺️ Vector Space Research: Word embeddings were pioneered byGoogle's word2vec team and further developed in"Efficient Estimation of Word Representations in Vector Space"(Mikolov et al., 2013). The famous "King - Man + Woman = Queen" example was first demonstrated in this research, showing how semantic relationships can be captured mathematically.

Imagine a Map of Words

Close together (similar meanings):

- • "King" and "Queen" are neighbors

- • "Happy" and "Joyful" are near each other

- • "Cat" and "Kitten" are close

Far apart (different meanings):

- • "King" and "Bicycle" are distant

- • "Happy" and "Thermometer" are far

- • "Cat" and "Mathematics" are separated

The Famous Word Math

This actually works in AI:

How? Because words are stored as coordinates in space!

Languages: Same Concepts, Different Tokens

But in meaning space, they all occupy similar positions! This is why AI can translate between languages.

Special Tokens: AI's Stage Directions

AI uses special tokens like stage directions in a play:

The Tokenization Challenge: Different Languages

English is relatively easy. Other languages are harder:

| Language | Word | Token Count |

|---|---|---|

| English | "Hello" | 1 token |

| Chinese | "你好" | 2-3 tokens |

| Arabic | "مرحبا" | 3-4 tokens |

| Emoji | "😀" | 1 token |

| Code | "function()" | 2-3 tokens |

This is why AI sometimes struggles with non-English languages - they need more tokens for the same meaning!

Hands-On: Play with Tokenization

🛠️ Practical Tools: The OpenAI Tokenizer we'll use is an implementation of theGPT-2 tokenizerusing Byte Pair Encoding. You can also explore other tokenizers likeHugging Face Tokenizerswhich provide implementations for various languages and models.

Try This Tokenizer Experiment:

1. Go to: OpenAI Tokenizer (free online tool)

Search for "OpenAI Tokenizer" to find the tool

2. Type: "Hello world"

See: 2 tokens

3. Type: "Supercalifragilisticexpialidocious"

See: Gets broken into chunks

4. Type: "你好世界" (Hello world in Chinese)

See: More tokens than English

5. Type: Some emojis

See: Each emoji is usually 1 token

Interesting Discoveries:

- • Spaces matter! "Hello" vs " Hello" are different tokens

- • Capital letters often create new tokens

- • Common words = fewer tokens

- • Rare words = broken into pieces

Why This Matters for You

Understanding tokens helps you:

Write better prompts

Shorter tokens = faster responses

Understand costs

APIs charge per token

Debug weird behavior

Sometimes AI splits words oddly

Optimize performance

Fewer tokens = better performance

Frequently Asked Questions

How does AI read text in simple terms?

AI reads text by converting words and characters into numbers through a process called tokenization. Think of it like translating English into Morse code, but more complex. AI breaks text into 'tokens' (pieces of words) and assigns each token a unique number. For example, 'Hello, world!' might become [15496, 18435, 37]. These numbers are then processed by AI models to understand meaning.

What is tokenization in AI for beginners?

Tokenization is how AI breaks text into smaller pieces it can understand. Instead of using whole words, AI often breaks words into syllables or meaningful chunks. For example, 'understanding' might become ['under', 'stand', 'ing']. Each of these chunks gets assigned a unique number that the AI can process. This helps AI handle words it has never seen before by breaking them into familiar pieces.

What are word embeddings and how do they work?

Word embeddings are like giving each word coordinates in a 'meaning space.' Words with similar meanings are located close to each other, while words with different meanings are far apart. This is why AI can do amazing 'word math' like King - Man + Woman = Queen. Each word becomes a set of numbers (coordinates) that represent its meaning, allowing AI to understand relationships between words and concepts.

Why does King - Man + Woman = Queen work in AI?

This works because words are stored as coordinates in meaning space. When you subtract the coordinates for 'Man' from 'King', you get the concept of 'royalty minus male.' When you add the coordinates for 'Woman,' you get 'royalty plus female,' which is exactly where 'Queen' is located in the meaning space. This mathematical relationship exists because AI learns these patterns by analyzing billions of text examples and understanding how words relate to each other.

Can I try AI tokenization myself?

Yes! You can try the OpenAI Tokenizer (a free online tool) to see how AI breaks text into tokens. Type in different words, sentences, and even emojis to see how many tokens they use. Try long words like 'supercalifragilisticexpialidocious' to see how they get broken into chunks, or type text in different languages to see how token counts vary. This hands-on experience helps understand how AI processes text.

Was this helpful?

Related Guides

Continue your local AI journey with these comprehensive guides

📚 Author & Educational Resources

About This Chapter

Written by the LocalAimaster Research Team educational team with expertise in natural language processing, computational linguistics, and AI language understanding technologies.

Last Updated: 2025-10-28

Reading Level: High School (Grades 9-12)

Prerequisites: Chapters 1-4: Understanding AI basics, machine learning, Transformer architecture, and model sizes

Target Audience: High school students, developers, linguists interested in AI language processing

Learning Objectives

- •Understand how AI converts text to numbers through tokenization

- •Learn why tokenization breaks words into meaningful pieces

- •Explore word embeddings and semantic meaning spaces

- •Discover how AI can perform "word math" operations

- •Experience hands-on tokenization with online tools

📖 Authoritative Sources & Further Reading

Research Papers:

Practical Resources:

🎓 Key Takeaways

- ✓Computers only understand numbers - text must be converted to tokens

- ✓Tokenization breaks words into pieces - not always full words

- ✓Embeddings create meaning space - similar words are close together

- ✓Word math actually works - King - Man + Woman = Queen

- ✓Languages use different token counts - English is more efficient

- ✓Tokens matter for cost and performance - understand them to optimize

Ready to Understand Neural Networks?

In Chapter 6, discover how neural networks work through simple Lego block analogies. Learn the AI brain architecture!

Continue to Chapter 6