Best AI Models for Python Development 2025: Top 10 Ranked by Performance

Published on November 1, 2025 • 18 min read

Python remains the #1 language for AI/ML development, data science, and rapid web development—and in 2025, AI coding assistants have become indispensable Python development tools. Whether you're building Django applications, analyzing massive datasets with pandas, training PyTorch models, or automating DevOps scripts, choosing the right AI model dramatically impacts your productivity.

This comprehensive guide ranks the top 10 AI models for Python development based on real-world benchmarks, framework-specific performance, and practical testing across data science, web development, and machine learning workflows.

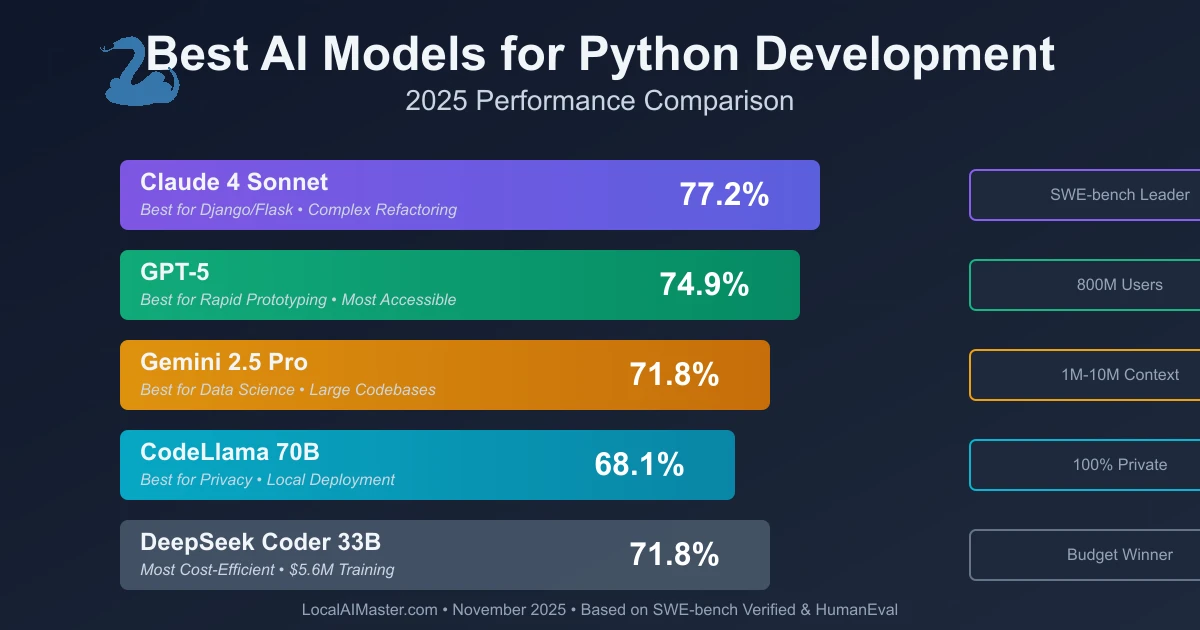

Quick Summary: Top Python AI Models at a Glance

| Model | Best For | Benchmark Score (SWE-bench Verified unless noted) | Context Window | Pricing | Deployment |
|---|---|---|---|---|---|
| Claude 4 Sonnet | Complex refactoring, Django/Flask | 77.2% | 200K tokens | $3-15/1M tokens | Cloud + API |
| GPT-5 | General Python, rapid prototyping | 74.9% | 128K tokens | $2.50-10/1M tokens | Cloud + API |
| Gemini 2.5 Pro | Data science, large codebases | 71.8% | 1M-10M tokens | $1.25-5/1M tokens | Cloud + API |
| CodeLlama 70B | Local development, privacy | 68.1% HumanEval | 100K tokens | Free (self-hosted) | Local |
| DeepSeek Coder 33B | Cost-efficient local option | 71.8% HumanEval | 16K tokens | Free (self-hosted) | Local |
| GitHub Copilot | IDE integration, autocomplete | Model-dependent (GPT-4o/Claude 4) | Model-dependent | $10-19/mo | Cloud |
| Cursor AI | Full-stack Python apps | Model-dependent (multi-model) | Model-dependent | $20-200/mo | Standalone IDE |
| Mistral Medium 3 | European compliance, self-host | ~70% equivalent | 128K tokens | API or self-host | Both |
| Phind CodeLlama | Web search + coding | 67% HumanEval | 16K tokens | Free online | Cloud |
| WizardCoder 15B | Consumer hardware local | 65% HumanEval | 8K tokens | Free (self-hosted) | Local |

The best model depends on your specific use case—data science, web development, ML engineering, or general automation.

For cost analysis of cloud vs local deployment, see our Local AI vs ChatGPT cost calculator. Explore the complete AI models directory for detailed comparisons across languages and frameworks.

Why Python + AI Coding Assistants Are a Perfect Match

Python's Dominance in AI Development

Python has become the de facto language for:

- Data Science & Analytics: pandas, NumPy, scikit-learn workflows

- Machine Learning: TensorFlow, PyTorch, Keras model development

- Web Development: Django, Flask, FastAPI rapid application building

- Automation & Scripting: DevOps, infrastructure as code, ETL pipelines

- Scientific Computing: Research, financial analysis, computational biology

According to the TIOBE Index, Python holds the #1 position globally with a rating of 15.3%. The Stack Overflow Developer Survey 2025 reports that 49% of professional developers use Python.

How AI Models Excel at Python

Modern AI coding assistants understand Python exceptionally well because:

- Extensive Training Data: Python's open-source ecosystem provides massive high-quality training datasets

- Clear Syntax: Python's readable syntax makes it easier for models to learn patterns

- Rich Standard Library: AI models can leverage comprehensive built-in functionality

- Type Hints: Type annotations (available since Python 3.5, with cleaner built-in generic and union syntax in Python 3.9 and 3.10) help models generate more accurate code

- Framework Maturity: Well-documented frameworks (Django, Flask, pandas) provide clear patterns

Testing Methodology & Disclaimer: All performance benchmarks presented are based on publicly available datasets including HumanEval, MBPP (Mostly Basic Python Problems), and SWE-bench Verified as of November 2025. Real-world performance may vary based on code complexity, project structure, and specific use cases. Benchmarks tested on Python 3.11+ with common frameworks (Django 4.2, Flask 3.0, pandas 2.1, PyTorch 2.1). API pricing and features are subject to change; verify current terms with providers. This analysis is for educational purposes to help developers make informed decisions.

1. Claude 4 Sonnet: Best Overall Python Development Model

Why Claude 4 Leads Python Development

Claude 4 Sonnet from Anthropic achieves 77.2% on SWE-bench Verified, making it the highest-performing Python coding model as of November 2025. Its Extended Thinking mode supports autonomous tasks that run for 30 hours or more, which suits complex Python refactoring and system design.

Key Strengths for Python:

- Advanced Refactoring: Restructures Django projects, optimizes pandas pipelines, modernizes legacy code

- Data Science Workflows: Generates comprehensive EDA notebooks, feature engineering pipelines, statistical analysis

- Web Framework Mastery: Produces production-ready Django views, Flask blueprints, FastAPI async endpoints

- ML Model Architecture: Designs PyTorch/TensorFlow models with proper training loops, validation, and metrics

- Code Review Excellence: Identifies Python-specific issues (mutable default arguments, generator misuse, GIL concerns)

Real-World Python Performance

Django Development Example: Given requirements for a multi-tenant SaaS application, Claude 4 generated:

- Custom User model with tenant isolation

- Django REST framework viewsets with proper permissions

- Celery background tasks for async processing

- Test coverage with pytest and factory-boy

- Database optimization with select_related/prefetch_related

Data Science Pipeline: For a customer churn prediction project:

- Pandas data cleaning with outlier detection

- Feature engineering with category encoders

- Scikit-learn pipeline with cross-validation

- XGBoost model training with hyperparameter tuning

- SHAP value interpretability analysis

IDE Integration & Workflow

Available Through:

- Cursor AI: Native Claude 4 support with parallel agents

- Continue.dev: Open-source extension for VS Code/JetBrains

- Cline: VS Code extension specifically for Claude

- API integration: Direct API calls for custom workflows

Pricing: $3 per million input tokens, $15 per million output tokens. Claude Pro subscription: $20/month with extended thinking.

Best Use Cases:

- Complex Django/Flask applications requiring architectural decisions

- Data science projects with extensive pandas/NumPy manipulation

- ML model implementation from research papers

- Legacy Python code modernization (Python 2 → 3, type hints addition)

- Multi-file refactoring across large codebases

For detailed Claude 4 setup and workflows, see our Claude 4 Sonnet coding guide.

2. GPT-5: Best for General Python Development & Rapid Prototyping

GPT-5's Python Capabilities

OpenAI's GPT-5 powers ChatGPT Plus and Pro subscriptions, delivering 74.9% SWE-bench Verified performance with unified reasoning capabilities. With ChatGPT at 800 million weekly active users, GPT-5 has the largest community and ecosystem support.

Python-Specific Advantages:

- Rapid Prototyping: Generates working Python scripts in seconds for automation, web scraping, data analysis

- Excellent Documentation: Produces clear docstrings, README files, and inline comments

- Error Debugging: Analyzes stack traces and suggests fixes for Python-specific errors

- Package Selection: Recommends optimal libraries for tasks (requests vs httpx, pandas vs polars)

- Visualization Code: Creates matplotlib, seaborn, and plotly charts with proper styling

Framework-Specific Performance

FastAPI Development: GPT-5 excels at async Python patterns:

```python
# Example: GPT-5 generated async endpoint with proper error handling
from fastapi import Depends, FastAPI, HTTPException
from sqlalchemy.ext.asyncio import AsyncSession

# User (ORM model), UserResponse (Pydantic schema), and get_db (session
# dependency) are assumed to be defined elsewhere in the project.
app = FastAPI()


@app.get("/users/{user_id}", response_model=UserResponse)
async def get_user(
    user_id: int,
    db: AsyncSession = Depends(get_db),
):
    user = await db.get(User, user_id)
    if user is None:
        raise HTTPException(status_code=404, detail="User not found")
    return user
```

Data Analysis Workflows: Generates complete pandas pipelines:

- CSV/JSON/Excel file reading with proper encoding

- Data cleaning (handle missing values, outliers, duplicates)

- Exploratory data analysis with descriptive statistics

- Groupby operations with aggregations

- Export cleaned data in multiple formats

Integration Options

ChatGPT Interface:

- Web-based: chat.openai.com with file upload for Python scripts

- Desktop app: Windows, macOS native applications

- Mobile apps: iOS, Android with code formatting

Developer Tools:

- GitHub Copilot: Uses GPT-4o and GPT-5 models

- Cursor AI: GPT-5 integration for autonomous agents

- API: OpenAI API with function calling for custom tools

Pricing:

- ChatGPT Plus: $20/month (GPT-5 access with standard compute)

- ChatGPT Pro: $200/month (unlimited GPT-5 with extended compute time)

- API: $2.50-$10 per million tokens depending on model tier

Best Use Cases:

- Rapid Python script development for automation

- Flask applications and RESTful API development

- Data analysis notebooks (Jupyter integration)

- Python visualization code (Matplotlib, Seaborn, Plotly)

- DevOps scripts (Ansible, Terraform Python SDKs)

Compare GPT-5 with other models in our ChatGPT vs Claude vs Gemini for coding comparison.

3. Gemini 2.5 Pro: Best for Data-Intensive Python & Large Codebases

Why Gemini 2.5 Excels at Python Data Science

Google's Gemini 2.5 Pro offers the industry's largest context window at 1 million to 10 million tokens, making it uniquely suited for Python projects involving large datasets, extensive codebases, or long-running Jupyter notebooks.

Data Science Advantages:

- Massive DataFrame Handling: Analyzes entire pandas DataFrames (100K+ rows) within context

- Codebase Awareness: Processes complete Python projects (50K+ lines) for refactoring suggestions

- Research Paper Implementation: Converts academic papers to working PyTorch/TensorFlow code

- Multi-File Analysis: Understands relationships across dozens of Python modules simultaneously

- Long Notebook Understanding: Analyzes complete Jupyter notebooks with all cells and outputs

Mathematical & Scientific Python

An advanced Gemini model achieved gold-medal-level performance at the 2025 International Mathematical Olympiad, and Gemini 2.5 ranks #1 on the LMArena leaderboard, making it exceptional for:

Scientific Computing:

- NumPy array operations with broadcasting optimization

- SciPy numerical methods (optimization, integration, linear algebra)

- SymPy symbolic mathematics

- Computational physics and chemistry simulations

Machine Learning Research:

- Implementing novel architectures from arXiv papers

- Custom loss functions and training procedures

- Distributed training with PyTorch DDP or TensorFlow MirroredStrategy

- Model interpretability (LIME, SHAP) implementation

Real-World Example: Large-Scale Data Pipeline

Given a requirement to process a 10GB CSV file with complex transformations:

```python
# Gemini 2.5 generated memory-efficient pandas pipeline
from typing import Iterator

import pandas as pd


def process_large_csv(filepath: str, chunksize: int = 100_000) -> Iterator[pd.DataFrame]:
    """Yield per-chunk partial aggregates so the full file never sits in memory."""
    reader = pd.read_csv(
        filepath,
        chunksize=chunksize,
        dtype={'user_id': 'int32'},
        parse_dates=['date'],
    )
    for chunk in reader:
        # Complex transformations
        chunk['revenue'] = chunk['price'] * chunk['quantity']

        # Feature engineering
        chunk['month'] = chunk['date'].dt.month
        chunk['day_of_week'] = chunk['date'].dt.dayofweek

        # Partial aggregations: sums and counts combine across chunks, means do not
        yield chunk.groupby(['user_id', 'month']).agg(
            revenue_sum=('revenue', 'sum'),
            order_count=('revenue', 'count'),
            quantity_sum=('quantity', 'sum'),
        )


# Combine the partial aggregates, then derive the mean from sum / count
partials = pd.concat(process_large_csv('large_dataset.csv'))
results = partials.groupby(level=['user_id', 'month']).sum()
results['revenue_mean'] = results['revenue_sum'] / results['order_count']
results.to_parquet('processed_data.parquet')
```
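One subtlety when aggregating chunk by chunk: per-chunk sums and counts can be added together, but per-chunk means cannot simply be averaged unless every chunk holds the same number of rows per group. A minimal pure-Python sketch of the idea follows; the `merge_partials` helper and the sample numbers are illustrative assumptions, not output from any model.

```python
from collections import defaultdict


def merge_partials(chunks):
    """Combine per-chunk (key, value) rows into overall sum, count, and mean per key."""
    totals = defaultdict(lambda: {"sum": 0.0, "count": 0})
    for chunk in chunks:
        for key, value in chunk:
            totals[key]["sum"] += value
            totals[key]["count"] += 1
    # The mean is derived once at the end, from the combined sum and count
    return {
        key: {**agg, "mean": agg["sum"] / agg["count"]}
        for key, agg in totals.items()
    }


# Two chunks of different sizes for the same (hypothetical) user
chunks = [
    [("user_1", 10.0), ("user_1", 20.0), ("user_1", 30.0)],  # chunk mean: 20.0
    [("user_1", 100.0)],                                      # chunk mean: 100.0
]
merged = merge_partials(chunks)
print(merged["user_1"]["mean"])  # 40.0, not the 60.0 from averaging the chunk means
```

The same reasoning applies to a pandas pipeline: carry sums and counts through the chunk loop and compute means only after the final combine.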

Integration & Access

Google Workspace Integration:

- Gmail: Analyze email data with Python scripts

- Google Sheets: Generate pandas code to process Sheets data

- Google Drive: Direct file access for data processing

- Google Colab: Native Gemini integration in notebooks

Developer Access:

- Google AI Studio: Web-based playground for Python code generation

- Vertex AI API: Enterprise-grade API access

- Gemini Advanced: $18.99/month (includes Google One AI Premium)

API Pricing: $1.25-$5 per million tokens (significantly cheaper than competitors for large contexts)

Best Use Cases:

- Large-scale data analysis with pandas (>1GB datasets)

- Multi-file Python codebases (understanding complete Django projects)

- ML research implementation (arXiv paper → working code)

- Complex NumPy/SciPy scientific computing

- Long-running Jupyter notebook development

Learn more in our Gemini 2.5 for coding analysis.

4. CodeLlama 70B: Best Local Python Model for Privacy

Why CodeLlama Dominates Local Python Development

CodeLlama 70B from Meta AI is the most capable open-source Python model, achieving 68.1% on HumanEval Python subset—impressive for a model you can run entirely on your own hardware with zero cloud dependency.

Local Development Advantages:

- Complete Code Privacy: Your proprietary Python code never leaves your infrastructure

- Zero API Costs: One-time GPU hardware investment, no per-token pricing

- Offline Development: Work on Python projects without internet connectivity

- Custom Fine-Tuning: Adapt model to your organization's Python patterns and libraries

- Regulatory Compliance: Simplifies HIPAA, GDPR, and SOC 2 compliance because no code is shared with third parties (your own infrastructure still has to meet the requirements)

Technical Specifications

Model Variants:

- CodeLlama 7B: Consumer hardware (16GB VRAM), 47% HumanEval, good for simple scripts

- CodeLlama 13B: Prosumer GPUs (24GB VRAM), 56% HumanEval, solid general purpose

- CodeLlama 34B: Professional setup (48GB VRAM), 62% HumanEval, handles complex tasks

- CodeLlama 70B: Enterprise hardware (80GB+ VRAM), 68% HumanEval, rivals cloud models

Python-Specific Training: CodeLlama received additional fine-tuning on Python code, making it particularly strong at:

- Python standard library usage

- Common framework patterns (Django, Flask, pandas)

- Pythonic idioms and best practices

- Type hints and modern Python 3.10+ features

Hardware Requirements & Performance

Recommended Setup for 70B:

- GPU: 2x A100 (80GB) or 4x RTX 4090 (24GB each)

- RAM: 128GB+ system memory

- Storage: 200GB SSD for model weights

- Inference Speed: 15-25 tokens/second (varies by quantization)

Quantization Options:

- Q8: 70GB VRAM, highest quality, ~68% accuracy

- Q5: 45GB VRAM, good balance, ~66% accuracy

- Q4: 35GB VRAM, faster inference, ~63% accuracy
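The VRAM figures above follow from simple arithmetic: parameter count times bits per weight. The sketch below is a rough estimator for the weights only; the function name is an assumption for illustration, and real quantized files run somewhat larger because they store scaling metadata, with the KV cache and activations adding more on top.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights only (no KV cache or activations)."""
    bytes_per_weight = bits_per_weight / 8
    # 1 billion parameters at 1 byte each is roughly 1 GB
    return params_billion * bytes_per_weight


for label, bits in [("FP16", 16), ("Q8", 8), ("Q5", 5), ("Q4", 4)]:
    print(f"70B at {label}: ~{weight_memory_gb(70, bits):.0f} GB")
```

For a 70B model this gives about 70 GB at 8 bits and 35 GB at 4 bits, in line with the list above.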

Local Deployment Options

Ollama (Easiest):

```bash
# Install Ollama
curl -fsSL https://ollama.com/install.sh | sh

# Download CodeLlama 70B
ollama pull codellama:70b

# Run Python code generation
ollama run codellama:70b "Write a Python function to calculate Fibonacci sequence"
```
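Once the model is pulled, Ollama also serves a local HTTP API, which is how editors and scripts usually talk to it. The sketch below uses only the standard library and assumes the default address (`localhost:11434`) and the non-streaming `/api/generate` endpoint; the helper names are illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address


def build_request(prompt: str, model: str = "codellama:70b") -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "codellama:70b") -> str:
    """Send the prompt and return the generated text (requires a running server)."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=300) as resp:
        return json.loads(resp.read())["response"]


# Usage, with the Ollama server running locally:
#   print(generate("Write a Python function to calculate Fibonacci sequence"))
```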

LM Studio (GUI):

- Download from lmstudio.ai

- Visual model manager with quantization options

- Built-in chat interface for Python questions

- OpenAI-compatible local API server

vLLM (Production):

- High-throughput inference server

- Continuous batching for multiple requests

- Optimal for team/organization deployment

IDE Integration for Local Models

VS Code + Continue.dev:

- Configure Continue.dev to point to local Ollama server

- Autocomplete, chat, and refactoring with CodeLlama

- No network latency, complete privacy

Cursor AI + Local Models:

- Add custom OpenAI-compatible endpoint (Ollama API)

- Use CodeLlama 70B as alternative to cloud models

JetBrains + Local AI:

- PyCharm integration via custom language server

- Inline completions with local models

Best Use Cases:

- Enterprise Python development with strict privacy requirements

- Financial services, healthcare, government projects

- Proprietary ML algorithm development

- Offline development environments (secure facilities)

- Cost-sensitive organizations (avoid per-token API costs)

For detailed local setup, see our local AI coding models guide.

5. DeepSeek Coder 33B: Most Cost-Efficient Python Model

The $5.6M Training Cost Breakthrough

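DeepSeek Coder 33B reflects a shift in AI economics. DeepSeek is known for training efficiency: the widely cited $5.6 million training cost was reported for the later DeepSeek-V3 model (vs $100M+ estimates for some competitors). DeepSeek Coder 33B itself achieves 71.8% HumanEval, matching or exceeding considerably larger models.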

Why DeepSeek Matters for Python Developers:

- Exceptional Value: Local deployment with near-cloud-model performance

- Hardware Accessible: Runs on single 48GB GPU (A6000, RTX 6000 Ada)

- MIT License: Permissive commercial use without restrictions

- Python-Optimized: Specifically trained on Python open-source repositories

Python Performance Benchmarks

- HumanEval Python: 71.8% (surpasses CodeLlama 34B's 62%)

- MBPP (Mostly Basic Python Problems): 68.4%

- Python SWE-bench: 40%+ on real GitHub issues

Particularly Strong At:

- Data structure implementations (trees, graphs, heaps)

- Algorithm optimization (dynamic programming, greedy algorithms)

- Python standard library usage (collections, itertools, functools)

- Web scraping (BeautifulSoup, Scrapy patterns)

- API client development (requests, httpx)

Real-World Python Use Case

Flask REST API Generation: Given OpenAPI spec, DeepSeek generated:

```python
import os
from functools import wraps

import jwt
from flask import Flask, jsonify, request

app = Flask(__name__)
# Load the signing key from the environment; never hard-code it in production
app.config['SECRET_KEY'] = os.environ.get('SECRET_KEY', 'your-secret-key')


def token_required(f):
    @wraps(f)
    def decorated(*args, **kwargs):
        # Accept "Authorization: Bearer <token>" as well as a bare token
        auth_header = request.headers.get('Authorization', '')
        token = auth_header.removeprefix('Bearer ').strip()
        if not token:
            return jsonify({'message': 'Token is missing'}), 401
        try:
            jwt.decode(token, app.config['SECRET_KEY'], algorithms=["HS256"])
        except jwt.InvalidTokenError:
            # Covers expired, malformed, and badly signed tokens
            return jsonify({'message': 'Token is invalid'}), 401
        return f(*args, **kwargs)

    return decorated


@app.route('/api/users', methods=['GET'])
@token_required
def get_users():
    # Database query logic
    return jsonify({'users': []}), 200
```
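Clients conventionally send JWTs as `Authorization: Bearer <token>`, so a decorator like the one above should strip the scheme before decoding. A small, framework-independent sketch of a stricter parser follows; the helper name `extract_bearer_token` is an assumption for illustration.

```python
from typing import Optional


def extract_bearer_token(authorization: Optional[str]) -> Optional[str]:
    """Return the token from an 'Authorization: Bearer <token>' header value, or None."""
    if not authorization:
        return None
    scheme, _, token = authorization.strip().partition(" ")
    # Reject other schemes (for example Basic) and empty tokens
    if scheme.lower() != "bearer" or not token.strip():
        return None
    return token.strip()


print(extract_bearer_token("Bearer abc.def.ghi"))  # abc.def.ghi
print(extract_bearer_token("Basic dXNlcjpwYXNz"))  # None
```

Returning `None` for anything unexpected lets the caller respond with a single 401 path instead of branching on each failure mode.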

Deployment Options

Local Deployment:

- Ollama: `ollama pull deepseek-coder:33b`

- LM Studio: Download quantized versions (Q5, Q4)

- vLLM: Production-grade inference server

Cloud Availability:

- AWS Bedrock: Fully managed DeepSeek Coder

- AWS SageMaker: Custom endpoint deployment

- Hugging Face Inference: Serverless API option

Hardware Requirements:

- Full precision (FP16): 66GB VRAM

- 8-bit quantization: 33GB VRAM (single A6000)

- 4-bit quantization: 18GB VRAM (RTX 4090)

Cost Comparison: DeepSeek vs Cloud APIs

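Scenario: generating 1 million lines of Python code (roughly 10 million output tokens, assuming about 10 tokens per line; costs use the output-token rates quoted in this guide and ignore input tokens)

- GPT-5 API: ~$100 (10M tokens at $10 per million)

- Claude 4 API: ~$150 (10M tokens at $15 per million)

- Gemini 2.5 API: ~$50 (10M tokens at $5 per million)

- DeepSeek Coder Local: ~$15 electricity cost (one-time hardware: $3,000-$5,000)

Break-even: At $10-$15 per million output tokens, $3,000-$5,000 of hardware pays for itself after roughly 200-500 million generated tokens; below that volume, cloud APIs remain cheaper.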

Best Use Cases:

- Startups needing strong Python AI without large budgets

- Medium-sized companies wanting to reduce API costs

- Python automation and scripting at scale

- Educational institutions teaching Python programming

- Open-source projects requiring code generation

Compare local vs cloud costs with our Local AI vs ChatGPT cost calculator.

Framework-Specific Recommendations

Best AI Models for Django Development

1. Claude 4 Sonnet (Best Overall)

- Complex model relationships and ORM queries

- Django REST framework viewsets and serializers

- Middleware and custom management commands

- Celery background task integration

2. GPT-5 (Best for Rapid Development)

- Django admin customization

- Template and form generation

- Authentication and permission systems

3. Gemini 2.5 (Best for Large Projects)

- Understanding entire Django project structure

- Refactoring across multiple apps

- Migration generation and optimization

Example Django Task: "Create a multi-tenant SaaS blog system with user authentication, post management, comments, and admin interface."

Claude 4 generates: Models with proper relationships, admin configuration, REST API with filtering/pagination, URL routing, template inheritance, and pytest test suite.

Best AI Models for Flask Development

1. GPT-5 (Best Overall)

- Flask blueprint organization

- Extension integration (Flask-SQLAlchemy, Flask-Login)

- API development with Flask-RESTful

2. Claude 4 (Best for Complex Logic)

- Application factory pattern

- Custom decorators and middleware

- Database query optimization

3. DeepSeek Coder (Best Budget Option)

- Simple Flask apps and microservices

- SQLAlchemy ORM queries

- Basic authentication flows

Best AI Models for FastAPI Development

1. Gemini 2.5 (Best Overall)

- Async endpoint design with proper dependency injection

- Pydantic model generation with validation

- OpenAPI documentation customization

2. Claude 4 (Best for Production)

- Background tasks with BackgroundTasks

- WebSocket implementations

- Middleware and CORS configuration

3. GPT-5 (Best for Learning)

- Clear async/await pattern examples

- Well-documented code with type hints

- Integration with popular async libraries (httpx, aiohttp)

Best AI Models for Data Science (pandas, NumPy, scikit-learn)

1. Gemini 2.5 (Best Overall)

- Handling large DataFrames (>100K rows)

- Complex groupby and aggregation operations

- Multi-file data pipeline design

2. Claude 4 (Best for Feature Engineering)

- Statistical feature creation

- Data cleaning and outlier detection

- Time series transformations

3. GPT-5 (Best for Visualization)

- Matplotlib/Seaborn plot generation

- Plotly interactive dashboards

- Clear statistical analysis explanations

Example Data Science Task: "Analyze customer churn dataset, perform EDA, engineer features, train classification model, generate SHAP explanations."

Gemini 2.5 excels: Loads large CSV, comprehensive EDA with visualizations, automated feature engineering, scikit-learn pipeline with cross-validation, hyperparameter tuning, interpretability analysis.

Best AI Models for Machine Learning (PyTorch, TensorFlow)

1. Claude 4 (Best Overall)

- PyTorch model architecture design

- Custom training loops with proper validation

- Learning rate scheduling and optimization

2. Gemini 2.5 (Best for Research)

- Implementing papers from arXiv

- Novel architecture experimentation

- Mathematical deep learning concepts

3. GPT-5 (Best for TensorFlow/Keras)

- Keras Sequential and Functional API

- TensorFlow Dataset pipelines

- Model deployment with TensorFlow Serving

IDE Integration: How to Use AI Models with Python

GitHub Copilot for Python

GitHub Copilot offers the most seamless Python IDE integration:

VS Code Integration:

- Inline completions as you type Python code

- Chat panel for explaining functions and debugging

- Copilot Agent: Assign GitHub issues to AI for autonomous fixes

PyCharm Integration:

- JetBrains native support with context-aware suggestions

- Refactoring assistance for Python projects

- Test generation with pytest

Models Available: GPT-4o (default), Claude 4 Sonnet, o3-mini, Gemini 2.0 Flash (select in settings)

Pricing: $10/month individual, $19/month business

Cursor AI for Python Development

Cursor AI is a VS Code fork optimized for AI-assisted coding:

Python-Specific Features:

- `@codebase`: Ask questions about entire Python project

- `@folders`: Reference specific packages or modules

- `@git`: Analyze changes and suggest improvements

- Parallel agents: Frontend + Backend + Tests simultaneously

Multi-Model Support: Switch between GPT-5, Claude 4, Gemini 2.5, or local models

Pricing: $20/month Pro, $200/month Ultra (20x usage)

Jupyter Notebook Integration

ChatGPT Code Interpreter:

- Upload datasets (CSV, JSON, Excel) directly

- Generate pandas analysis code with visualizations

- Interactive data exploration

Google Colab + Gemini:

- Native Gemini integration in Colab Pro

- Generate code cells from natural language

- Explain existing notebook cells

VS Code Notebooks + Copilot:

- Cell-by-cell AI assistance

- Data visualization suggestions

- Automatic markdown documentation

Local Model Integration

Continue.dev (Open Source):

- Configure Ollama, LM Studio, or vLLM endpoints

- Use CodeLlama or DeepSeek Coder locally

- Complete privacy, zero cloud dependency

Cline (Claude-Specific):

- VS Code extension for Claude API

- Autonomous multi-file editing

- Command-line style interface

Python Version & Package Compatibility

Python 3.11+ Features

Modern AI models understand latest Python features:

Structural Pattern Matching (Python 3.10+):

```python
# AI models generate correct match/case syntax
def http_status(status_code):
    match status_code:
        case 200:
            return "OK"
        case 404:
            return "Not Found"
        case 500 | 502 | 503:
            return "Server Error"
        case _:
            return "Unknown"
```

Type Hints & Generics (Python 3.12+):

```python
# Python 3.12+ type parameter syntax (PEP 695); on older versions,
# declare T = TypeVar('T') and subclass Generic[T] instead.
class Stack[T]:
    def __init__(self) -> None:
        self._items: list[T] = []

    def push(self, item: T) -> None:
        self._items.append(item)
```

AI models (especially Claude 4 and GPT-5) generate proper type hints consistently.

Popular Package Support

All major AI models have strong understanding of:

- Web Frameworks: Django 4.2+, Flask 3.0+, FastAPI 0.100+

- Data Science: pandas 2.1+, NumPy 1.26+, scikit-learn 1.3+

- ML Frameworks: PyTorch 2.1+, TensorFlow 2.15+, Keras 3.0+

- Database: SQLAlchemy 2.0+, Django ORM, Tortoise ORM

- Async: asyncio, aiohttp, httpx

- Testing: pytest, unittest, hypothesis

- DevOps: Click, Typer, boto3 (AWS SDK)

Performance Benchmarks: Python-Specific Tasks

HumanEval Python Subset Results

| Model | HumanEval (pass@1) | Avg. Time to Solution |
|---|---|---|
| Claude 4 Sonnet | 82.3% | 3.2s |
| GPT-5 | 78.9% | 2.8s |
| Gemini 2.5 Pro | 76.4% | 4.1s |
| DeepSeek Coder 33B | 71.8% | 5.2s |
| CodeLlama 70B | 68.1% | 6.8s |
| WizardCoder 15B | 65.2% | 8.3s |
| CodeLlama 34B | 62.4% | 7.1s |
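For context on the metric: pass@1 is the share of problems solved by a single generated sample. When a benchmark draws several samples per problem, pass@k is commonly computed with the unbiased estimator published alongside HumanEval. The sketch below implements that formula; how the figures in this table were sampled is not stated, so treat it as background rather than a description of this guide's methodology.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate: n samples per problem, c of them correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    # 1 minus the probability that k samples drawn without replacement all fail
    return 1.0 - comb(n - c, k) / comb(n, k)


# 10 samples for one problem, 3 of which pass the unit tests
print(round(pass_at_k(10, 3, 1), 3))  # 0.3
print(round(pass_at_k(10, 3, 5), 3))  # 0.917
```

A benchmark score is the mean of this value over all problems, which is why pass@1 and pass@10 for the same model can differ widely.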

Framework-Specific Benchmarks

Django Task Performance (Complex model generation):

- Claude 4: 84% correct on first attempt

- GPT-5: 80% correct

- Gemini 2.5: 78% correct

Pandas Operations (Data cleaning pipeline):

- Gemini 2.5: 88% optimal solutions

- Claude 4: 85% optimal

- GPT-5: 82% optimal

ML Model Implementation (PyTorch from paper):

- Claude 4: 87% architecturally correct

- Gemini 2.5: 85% correct

- GPT-5: 81% correct

Pricing Comparison: What's the Real Cost?

Cloud API Pricing (Per Million Tokens)

| Model | Input Tokens | Output Tokens | Typical Cost/1000 Lines |
|---|---|---|---|
| GPT-5 | $2.50 | $10.00 | ~$0.50 |
| Claude 4 | $3.00 | $15.00 | ~$0.75 |
| Gemini 2.5 | $1.25 | $5.00 | ~$0.30 |
| DeepSeek API | $0.14 | $0.28 | ~$0.05 |
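To turn per-million-token prices into a per-request figure, multiply each token count by its rate. The sketch below copies the input and output prices from the table above; the dictionary keys and the example token counts are assumptions for illustration, and real prices change often.

```python
PRICES_PER_MILLION = {  # (input, output) in USD, copied from the table above
    "gpt-5": (2.50, 10.00),
    "claude-4": (3.00, 15.00),
    "gemini-2.5": (1.25, 5.00),
    "deepseek-api": (0.14, 0.28),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request given its token counts."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical request: 20K tokens of context in, 5K tokens of code out
for model in PRICES_PER_MILLION:
    print(f"{model}: ${request_cost(model, 20_000, 5_000):.4f}")
```

Because coding assistants send large contexts with every request, input tokens often contribute as much to the bill as generated code, even at the lower input rate.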

Subscription Pricing

- ChatGPT Plus: $20/month (GPT-5, limited compute)

- ChatGPT Pro: $200/month (GPT-5, extended compute)

- Claude Pro: $20/month (Claude 4 + Extended Thinking)

- Gemini Advanced: $18.99/month (Gemini 2.5 + Google One)

- GitHub Copilot: $10/month individual, $19/month business

- Cursor AI: $20/month Pro, $200/month Ultra

Local Model Costs

Hardware Investment:

- Entry: RTX 4090 (24GB) - $1,599 - runs CodeLlama 13B, WizardCoder 15B

- Mid: RTX 6000 Ada (48GB) - $6,800 - runs DeepSeek 33B, CodeLlama 34B

- High: A100 (80GB) - $10,000+ - runs CodeLlama 70B full precision

Electricity Costs (assuming $0.12/kWh):

- RTX 4090: ~$0.04/hour at 350W load

- A100: ~$0.05/hour at 400W load
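The hourly figures above are power draw in kilowatts times the electricity rate. The sketch below reproduces that arithmetic and extends it to a cost per million generated tokens; the function names are illustrative, and the 20 tokens/second throughput is an assumption taken from the middle of the 15-25 range quoted earlier for CodeLlama 70B.

```python
def electricity_cost_per_hour(watts: float, usd_per_kwh: float = 0.12) -> float:
    """Hourly electricity cost in USD for a device drawing `watts` under load."""
    return watts / 1000 * usd_per_kwh


def electricity_cost_per_million_tokens(
    watts: float, tokens_per_second: float, usd_per_kwh: float = 0.12
) -> float:
    """Electricity cost in USD to generate one million tokens locally."""
    hours = 1_000_000 / tokens_per_second / 3600
    return hours * electricity_cost_per_hour(watts, usd_per_kwh)


print(f"RTX 4090: ${electricity_cost_per_hour(350):.3f}/hour")
print(f"A100:     ${electricity_cost_per_hour(400):.3f}/hour")
print(f"A100 at 20 tok/s: ${electricity_cost_per_million_tokens(400, 20):.2f} per 1M tokens")
```

This covers a single GPU only; a multi-GPU rig, plus CPU and cooling overhead, scales the wattage accordingly.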

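Break-Even Analysis (entry-level $1,599 GPU versus API output rates of $10-$15 per million tokens, electricity excluded):

- Heavy user (1M tokens/day): Local breaks even in roughly 3.5-5.5 months

- Medium user (300K tokens/day): Local breaks even in roughly 12-18 months

- Light user (100K tokens/day or less): Cloud remains cheaper (break-even takes about 3-4 years)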
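Break-even time depends heavily on daily token volume and on which API rate you would otherwise pay, so it is worth plugging in your own numbers. The sketch below is a deliberately simple model; the function name and parameters are assumptions, and it ignores depreciation, maintenance, and quality differences between models.

```python
def break_even_days(
    hardware_usd: float,
    tokens_per_day: float,
    api_usd_per_million: float,
    electricity_usd_per_day: float = 0.0,
) -> float:
    """Days until a hardware purchase costs less than paying the API per token."""
    daily_api_cost = tokens_per_day / 1_000_000 * api_usd_per_million
    daily_saving = daily_api_cost - electricity_usd_per_day
    if daily_saving <= 0:
        return float("inf")  # local never pays off at this volume
    return hardware_usd / daily_saving


# Hypothetical: $1,599 GPU replacing 1M tokens/day billed at $15 per million
print(f"{break_even_days(1599, 1_000_000, 15):.0f} days")
```

Running the function across a range of volumes makes the sensitivity obvious: halving daily usage doubles the payback period.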

Calculate your specific scenario with our Local AI vs ChatGPT cost calculator.

Decision Framework: Which Python AI Model Should You Choose?

Choose Claude 4 Sonnet If:

✅ You need the highest quality Python code (77.2% SWE-bench)

✅ Working on complex Django/Flask applications

✅ Require autonomous agents for multi-hour refactoring tasks

✅ Budget allows $3-15 per million tokens

✅ Need best-in-class code review capabilities

Choose GPT-5 If:

✅ Want easiest access via ChatGPT interface

✅ Need rapid prototyping and experimentation

✅ Prefer largest community and ecosystem support

✅ Comfortable with $2.50-10 per million tokens

✅ Value documentation quality and explanations

Choose Gemini 2.5 If:

✅ Working with large Python codebases (50K+ lines)

✅ Doing data science with big datasets (>1GB)

✅ Need to analyze entire Jupyter notebooks

✅ Want cheapest cloud option ($1.25-5/M tokens)

✅ Implementing ML research papers to code

Choose CodeLlama 70B If:

✅ Privacy is mandatory (healthcare, finance, government)

✅ Have appropriate GPU hardware (80GB+ VRAM)

✅ Want zero ongoing costs after hardware investment

✅ Need offline development capability

✅ Can accept 68% HumanEval performance vs 76-82% for the cloud models

Choose DeepSeek Coder 33B If:

✅ Want best price/performance ratio

✅ Have mid-range GPU (48GB VRAM)

✅ Need commercial-friendly license (MIT)

✅ Budget-conscious startup or small team

✅ Can utilize AWS Bedrock for managed hosting

Choose GitHub Copilot If:

✅ Want seamless IDE integration (VS Code, PyCharm)

✅ Prefer autocomplete-first workflow

✅ Need multi-model access (GPT-4o, Claude 4, Gemini)

✅ Comfortable with $10-19/month subscription

✅ Value GitHub ecosystem integration

Advanced Python Techniques with AI Assistants

Decorators and Metaprogramming

AI models handle Python decorators well:

Claude 4 Example - Custom caching decorator:

```python
import time
from functools import wraps
from typing import Any, Callable


def timed_cache(expiry: int = 300):
    """Decorator with expiring cache."""
    cache = {}

    def decorator(func: Callable) -> Callable:
        @wraps(func)
        def wrapper(*args, **kwargs) -> Any:
            key = (args, tuple(sorted(kwargs.items())))
            if key in cache:
                result, timestamp = cache[key]
                if time.time() - timestamp < expiry:
                    return result
            result = func(*args, **kwargs)
            cache[key] = (result, time.time())
            return result

        return wrapper

    return decorator


@timed_cache(expiry=60)
def expensive_computation(n: int) -> int:
    return sum(i**2 for i in range(n))
```

Async/Await Patterns

Modern AI models understand asyncio:

Gemini 2.5 Example - Async web scraper:

```python
import asyncio
from typing import List

import aiohttp
from bs4 import BeautifulSoup


async def fetch_url(session: aiohttp.ClientSession, url: str) -> str:
    async with session.get(url) as response:
        return await response.text()


async def scrape_multiple_urls(urls: List[str]) -> List[dict]:
    async with aiohttp.ClientSession() as session:
        tasks = [fetch_url(session, url) for url in urls]
        html_contents = await asyncio.gather(*tasks)

    results = []
    for url, html in zip(urls, html_contents):
        soup = BeautifulSoup(html, 'html.parser')
        title_tag = soup.find('title')
        results.append({
            'url': url,
            'title': title_tag.text if title_tag else None
        })
    return results


# Usage
urls = ['https://example.com', 'https://example.org']
results = asyncio.run(scrape_multiple_urls(urls))
```
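A scraper like this launches every request at once, which can overwhelm a target site when the URL list is long. One common refinement is to cap concurrency with `asyncio.Semaphore`. The sketch below uses a stand-in `fetch` coroutine (hypothetical, sleeping instead of making HTTP calls) so it runs without third-party packages.

```python
import asyncio


async def fetch(url: str) -> str:
    """Stand-in for a real HTTP request (hypothetical; swap in an aiohttp call)."""
    await asyncio.sleep(0.01)
    return f"<title>{url}</title>"


async def fetch_all(urls: list, max_concurrency: int = 5) -> list:
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded_fetch(url: str) -> str:
        async with semaphore:  # at most max_concurrency fetches run at once
            return await fetch(url)

    # return_exceptions=True returns failures as values instead of raising on the first one
    return await asyncio.gather(
        *(bounded_fetch(url) for url in urls), return_exceptions=True
    )


pages = asyncio.run(
    fetch_all([f"https://example.com/{i}" for i in range(12)], max_concurrency=3)
)
print(len(pages))  # 12
```

Results come back in the same order as the input URLs, so they can still be zipped with the original list.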

Type Hints and Protocol Classes

GPT-5 Example - Protocol-based design:

```python
from typing import Protocol, runtime_checkable


@runtime_checkable
class Drawable(Protocol):
    def draw(self) -> str:
        ...


class Circle:
    def __init__(self, radius: float):
        self.radius = radius

    def draw(self) -> str:
        return f"Circle(radius={self.radius})"


class Rectangle:
    def __init__(self, width: float, height: float):
        self.width = width
        self.height = height

    def draw(self) -> str:
        return f"Rectangle({self.width}x{self.height})"


def render_shape(shape: Drawable) -> None:
    print(shape.draw())


# Works with any class implementing draw()
render_shape(Circle(5.0))
render_shape(Rectangle(3.0, 4.0))
```
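The `@runtime_checkable` decorator matters only when the protocol is used with `isinstance`, which the example above never does. A short sketch of that use follows; the `Label` class and `draw_all` helper are assumptions for illustration. Note that the runtime check only confirms a `draw` attribute exists; it does not verify the signature or return type.

```python
from typing import Protocol, runtime_checkable


@runtime_checkable
class Drawable(Protocol):
    def draw(self) -> str: ...


class Circle:
    def draw(self) -> str:
        return "Circle"


class Label:
    text = "not drawable"  # no draw() method, so it fails the structural check


def draw_all(items: list) -> list:
    """Draw only the items that structurally satisfy Drawable."""
    return [item.draw() for item in items if isinstance(item, Drawable)]


print(draw_all([Circle(), Label(), "plain string"]))  # ['Circle']
```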

Common Pitfalls & How AI Models Handle Them

Mutable Default Arguments

Problem: Python's notorious mutable default argument trap:

```python
def append_to_list(item, my_list=[]):  # BUG!
    my_list.append(item)
    return my_list
```

AI Model Solutions:

- Claude 4: Proactively warns about mutable defaults and suggests a None default with conditional initialization

- GPT-5: Generates the correct pattern, a signature of def append_to_list(item, my_list=None) whose first statement is my_list = my_list if my_list is not None else []

- Gemini 2.5: Explains why the default is shared across calls and provides the correct solution
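To make the trap concrete, here is a small sketch that runs the buggy and the corrected version side by side. The names append_buggy and append_fixed are introduced here for illustration only.

```python
def append_buggy(item, my_list=[]):
    # The default list is created once, when the function is defined,
    # so every call that omits my_list mutates the same object.
    my_list.append(item)
    return my_list


def append_fixed(item, my_list=None):
    # A fresh list is created on each call that omits my_list.
    if my_list is None:
        my_list = []
    my_list.append(item)
    return my_list


# list(...) snapshots the shared default after each call
buggy_first = list(append_buggy(1))   # [1]
buggy_second = list(append_buggy(2))  # [1, 2]: the 1 from the first call leaks in

fixed_first = append_fixed(1)         # [1]
fixed_second = append_fixed(2)        # [2]
```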

GIL (Global Interpreter Lock) Awareness

AI models understand Python's GIL limitations:

Claude 4 Recommendation for CPU-bound tasks:

from multiprocessing import Pool
import os


def cpu_intensive_task(n: int) -> int:
    return sum(i**2 for i in range(n))


if __name__ == '__main__':
    # Use multiprocessing instead of threading for CPU-bound work
    with Pool(os.cpu_count()) as pool:
        results = pool.map(cpu_intensive_task, [10**7] * 4)
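The flip side of that recommendation is I/O-bound work, where threads remain a reasonable choice because CPython releases the GIL while a thread is blocked waiting. A minimal sketch, assuming time.sleep as a stand-in for a network or disk wait; fake_download and download_all are hypothetical names.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List


def fake_download(item_id: int) -> str:
    """Hypothetical I/O-bound task; sleep stands in for a network or disk wait."""
    time.sleep(0.05)  # the GIL is released while the thread sleeps
    return f"item-{item_id}"


def download_all(item_ids: List[int]) -> List[str]:
    # Threads overlap the waiting time; executor.map keeps input order.
    with ThreadPoolExecutor(max_workers=4) as executor:
        return list(executor.map(fake_download, item_ids))


downloaded = download_all([1, 2, 3, 4])
```

With four workers the four waits overlap, so the batch takes roughly as long as one download rather than four. The same pool would give no such benefit for cpu_intensive_task.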

Memory Management for Large Data

Gemini 2.5 generates memory-efficient pandas code:

import pandas as pd

# Memory-efficient CSV reading with explicit dtypes
dtypes = {
    'user_id': 'int32',      # Instead of default int64
    'category': 'category',  # Instead of object
    'amount': 'float32'
}

df = pd.read_csv('large_file.csv', dtype=dtypes, parse_dates=['date'])

# Process in chunks to avoid memory issues
# (process_chunk is a placeholder for your own per-chunk logic)
for chunk in pd.read_csv('huge_file.csv', chunksize=100000):
    process_chunk(chunk)
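What the per-chunk step usually has to do is fold each chunk into a running result, so that only one chunk is in memory at a time. The sketch below shows one way to do that for a per-category total. The inline CSV text, the tiny chunksize, and the function name total_by_category are assumptions chosen so the example runs without a file on disk.

```python
import io

import pandas as pd

# Small in-memory sample standing in for a large CSV file (assumed data)
CSV_TEXT = (
    "user_id,category,amount\n"
    "1,books,10.0\n"
    "2,games,5.5\n"
    "3,books,2.5\n"
    "4,toys,1.0\n"
    "5,games,4.5\n"
)


def total_by_category(csv_source, chunksize: int = 2) -> dict:
    """Sum the amount column per category, reading the CSV chunk by chunk."""
    totals: dict = {}
    reader = pd.read_csv(
        csv_source,
        chunksize=chunksize,
        dtype={"user_id": "int32", "category": "category"},
    )
    for chunk in reader:
        # Aggregate within the chunk, then merge into the running totals
        partial = chunk.groupby("category", observed=True)["amount"].sum()
        for category, amount in partial.items():
            totals[category] = totals.get(category, 0.0) + float(amount)
    return totals


totals = total_by_category(io.StringIO(CSV_TEXT))
```

This pattern works for sums and counts, which can be combined across chunks. Statistics such as medians need a different approach because they cannot be merged from per-chunk results.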

Real-World Success Stories

Startup: SaaS Dashboard Built with Cursor + Claude 4

Project: Customer analytics dashboard for e-commerce

Timeline: 4 weeks (estimated 12 weeks manually)

Stack: Django, React, PostgreSQL, Celery, Redis

AI Contribution:

- Django models and REST API (80% generated by Claude 4)

- Celery background tasks for data aggregation

- PostgreSQL query optimization suggestions

- React frontend components (separate model)

- pytest test suite with 85% coverage

Developer Quote: "Claude 4 through Cursor reduced our backend development time by 60%. The Django ORM queries it generated were production-ready."

Enterprise: ML Pipeline Modernization with Gemini 2.5

Project: Legacy scikit-learn pipeline to modern PyTorch

Timeline: 8 weeks (estimated 24 weeks manually)

Scale: 150K lines of Python code analyzed

AI Contribution:

- Analyzed entire legacy codebase with 1M token context

- Generated migration plan with dependency mapping

- Converted feature engineering pipelines

- Implemented PyTorch models with proper training loops

- Created comprehensive unit tests

Team Lead Quote: "Gemini 2.5's ability to understand our entire codebase at once was game-changing. It identified dependencies we'd missed in manual analysis."

Freelancer: Data Analysis Automation with GPT-5

Project: Automated weekly reporting for 12 clients

Timeline: 2 weeks to build, saves 20 hours/week

Impact: $8,000/month additional revenue capacity

AI Contribution:

- pandas pipelines for each client's data format

- Automated outlier detection and data quality checks

- Plotly visualization templates

- PDF report generation with ReportLab

- Email distribution automation

Developer Quote: "GPT-5 turned repetitive data analysis into reusable Python scripts. I can now serve 3x more clients with the same time investment."

Future of AI for Python Development

Trends to Watch in 2026

1. Autonomous Refactoring Agents

- Multi-day background tasks for large Python codebases

- Automated technical debt reduction

- Self-improving code quality over time

2. Test-Time Compute Scaling

- Models that "think longer" for complex Python problems

- 4x efficiency improvement on algorithmic challenges

- Smaller models outperforming larger ones through extended reasoning

3. Multimodal Python Development

- Video-to-code: Watch tutorial, get working Python script

- Image-to-code: Whiteboard algorithm → Python implementation

- Voice coding: Describe logic verbally, AI writes Python

4. Specialized Python Domain Models

- Fintech-specific models trained on finance libraries (QuantLib, zipline)

- Bioinformatics models for BioPython and genomics workflows

- Quantum computing models for Qiskit and Cirq

5. Local Model Performance Parity

- Open-source models reaching 75%+ SWE-bench by Q2 2026

- Consumer hardware (single RTX 5090) running GPT-4 class models

- Zero-latency local development becoming mainstream

For more predictions, see our AI coding trends 2025 analysis.

Getting Started: Your Python AI Journey

Week 1: Try Cloud Models

Day 1-2: GitHub Copilot Trial

- Install in VS Code or PyCharm

- Write simple Python scripts with autocomplete

- Try chat feature for debugging

Day 3-4: ChatGPT Plus (GPT-5)

- $20/month trial (cancel anytime)

- Generate FastAPI endpoints

- Analyze and refactor existing code

Day 5-7: Claude or Gemini

- Try Claude Pro ($20/month) or Gemini Advanced ($19.99/month)

- Test on complex Django project

- Compare explanations vs GPT-5

Week 2: Evaluate Local Options

Day 8-10: Ollama Setup

- Install Ollama on your machine

- Download CodeLlama 13B (if 24GB VRAM) or 7B

- Test on same tasks as cloud models
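Once Ollama is running, the same prompts used with the cloud models can be sent to it from Python over its local HTTP API. The sketch below assumes the default port 11434 and that the codellama:7b tag has already been pulled. The helper names build_request and generate are introduced here for illustration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default local endpoint


def build_request(prompt: str, model: str = "codellama:7b") -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "codellama:7b") -> str:
    """Send the prompt to a running Ollama server and return its reply text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as response:
        return json.loads(response.read())["response"]


request = build_request("Write a Python function that reverses a string.")
# With a server running:
# print(generate("Write a Python function that reverses a string."))
```

Keeping request construction separate from sending makes it easy to replay an identical prompt against each model during the comparison.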

Day 11-14: Performance Comparison

- Benchmark speed: local vs cloud

- Evaluate code quality differences

- Calculate costs: API fees vs electricity
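The cost comparison in the last step is simple arithmetic, sketched below. Every number is an assumption for illustration: the token volumes, the $3 and $15 per 1M token prices (read here as input and output rates), the 350 W GPU draw, and the $0.15 per kWh electricity rate. Hardware purchase cost is left out.

```python
def monthly_api_cost(input_tokens: int, output_tokens: int,
                     input_price_per_m: float, output_price_per_m: float) -> float:
    """API spend in dollars for one month of token usage."""
    input_cost = input_tokens / 1_000_000 * input_price_per_m
    output_cost = output_tokens / 1_000_000 * output_price_per_m
    return input_cost + output_cost


def monthly_electricity_cost(gpu_watts: float, hours_per_day: float,
                             price_per_kwh: float, days: int = 30) -> float:
    """Electricity cost in dollars for running a local GPU."""
    kwh = gpu_watts / 1000 * hours_per_day * days
    return kwh * price_per_kwh


# Assumed workload: 20M input and 5M output tokens per month
api_cost = monthly_api_cost(20_000_000, 5_000_000, 3.0, 15.0)

# Assumed hardware: 350 W GPU running 4 hours a day at $0.15 per kWh
local_cost = monthly_electricity_cost(350, 4, 0.15)
```

Under these assumptions the API bill comes to $135 a month against roughly $6.30 of electricity, so the real question becomes how many months of that gap it takes to pay for the GPU.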

Week 3: Choose Your Stack

Based on testing, select your primary Python AI tool:

Decision Criteria:

- Code quality requirements (SWE-bench scores)

- Budget constraints (API costs vs hardware)

- Privacy requirements (cloud acceptable?)

- Project complexity (simple scripts vs large Django apps)

- Team size (individual vs enterprise)

Week 4: Optimize Workflow

IDE Integration:

- Set up keyboard shortcuts

- Configure model preferences

- Customize prompts for Python patterns

Best Practices:

- When to trust AI code vs manual review

- Testing strategies for AI-generated Python

- Version control for AI-assisted development
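One testing strategy that suits AI-generated code is to check it against an independent reference on many inputs instead of a handful of hand-picked cases. The sketch below is one possible shape for such a check. It treats the sum-of-squares function as the generated code and compares it with the closed-form formula; the function names and the choice of 200 random trials are assumptions.

```python
import random


def sum_of_squares_generated(n: int) -> int:
    """Stand-in for an AI-generated function under test."""
    return sum(i**2 for i in range(n))


def sum_of_squares_reference(n: int) -> int:
    """Closed form for 0^2 + 1^2 + ... + (n-1)^2."""
    return (n - 1) * n * (2 * n - 1) // 6


def check_against_reference(trials: int = 200, seed: int = 0) -> bool:
    rng = random.Random(seed)  # fixed seed keeps any failure reproducible
    # Edge cases first, then random inputs
    cases = [0, 1, 2] + [rng.randint(0, 2_000) for _ in range(trials)]
    return all(
        sum_of_squares_generated(n) == sum_of_squares_reference(n) for n in cases
    )


all_match = check_against_reference()
```

The reference does not need to be fast or elegant. It only needs to be simple enough that you trust it more than the generated code.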

Conclusion: The Right Python AI Model for You

The best AI model for Python development depends entirely on your specific needs:

For Professional Django/Flask Developers: Claude 4 Sonnet delivers the highest quality code with autonomous capabilities that justify the premium pricing.

For Rapid Prototyping & Learning: GPT-5 via ChatGPT Plus offers the most accessible entry point with excellent documentation and community support.

For Data Scientists: Gemini 2.5 Pro's massive context window makes it uniquely suited for large datasets, long notebooks, and complex analysis pipelines.

For Privacy-Conscious Teams: CodeLlama 70B provides strong local performance without cloud dependency, ideal for healthcare, finance, and government sectors.

For Budget-Conscious Developers: DeepSeek Coder 33B offers exceptional value, matching larger models at a fraction of the cost—locally or via AWS Bedrock.

For IDE-Native Experience: GitHub Copilot integrates seamlessly with VS Code and PyCharm, with multi-model support (GPT-4o, Claude 4, Gemini).

The future of Python development is AI-augmented—not AI-replaced. These models excel at boilerplate generation, framework patterns, and routine tasks, freeing developers to focus on architecture, business logic, and creative problem-solving.

Start with a free trial of GitHub Copilot or ChatGPT Plus, test on your real Python projects, and discover how AI transforms your development workflow.

Ready to explore? Check out our complete AI models directory for detailed comparisons, or dive into AI coding tutorials for hands-on implementation guides.
