How Much RAM Do You Need for Local AI? Complete Guide 2025

RAM Requirements for Local AI: Memory Optimization Complete Guide 2025

Published on October 28, 2025 • 20 min read

Understanding RAM requirements for local AI is crucial for optimal performance. This comprehensive guide details memory requirements for running AI models locally, from 7B parameter models needing 8-16GB RAM to 70B models requiring 64GB+ RAM. Learn how to optimize memory usage, select appropriate model sizes, and determine when RAM upgrades provide the best value for your local AI workloads.

💡 Quick Answer

You can run 7B models comfortably with 16 GB RAM; 30B-class models need about 32 GB at 4-bit quantization, and 70B models need 64 GB or more for smooth inference.

How Much RAM Do You Need for Local AI?

You need 8GB RAM minimum to run small local AI models at 4-bit quantization (up to 7B parameters like Mistral 7B), 16GB RAM for medium models (up to 13B parameters like Llama 2 13B), and 32GB+ RAM for large models (33B+ parameters). Models like Llama 3.1 8B or Mistral 7B run on 8GB if you close other applications first; 16GB gives them comfortable headroom.

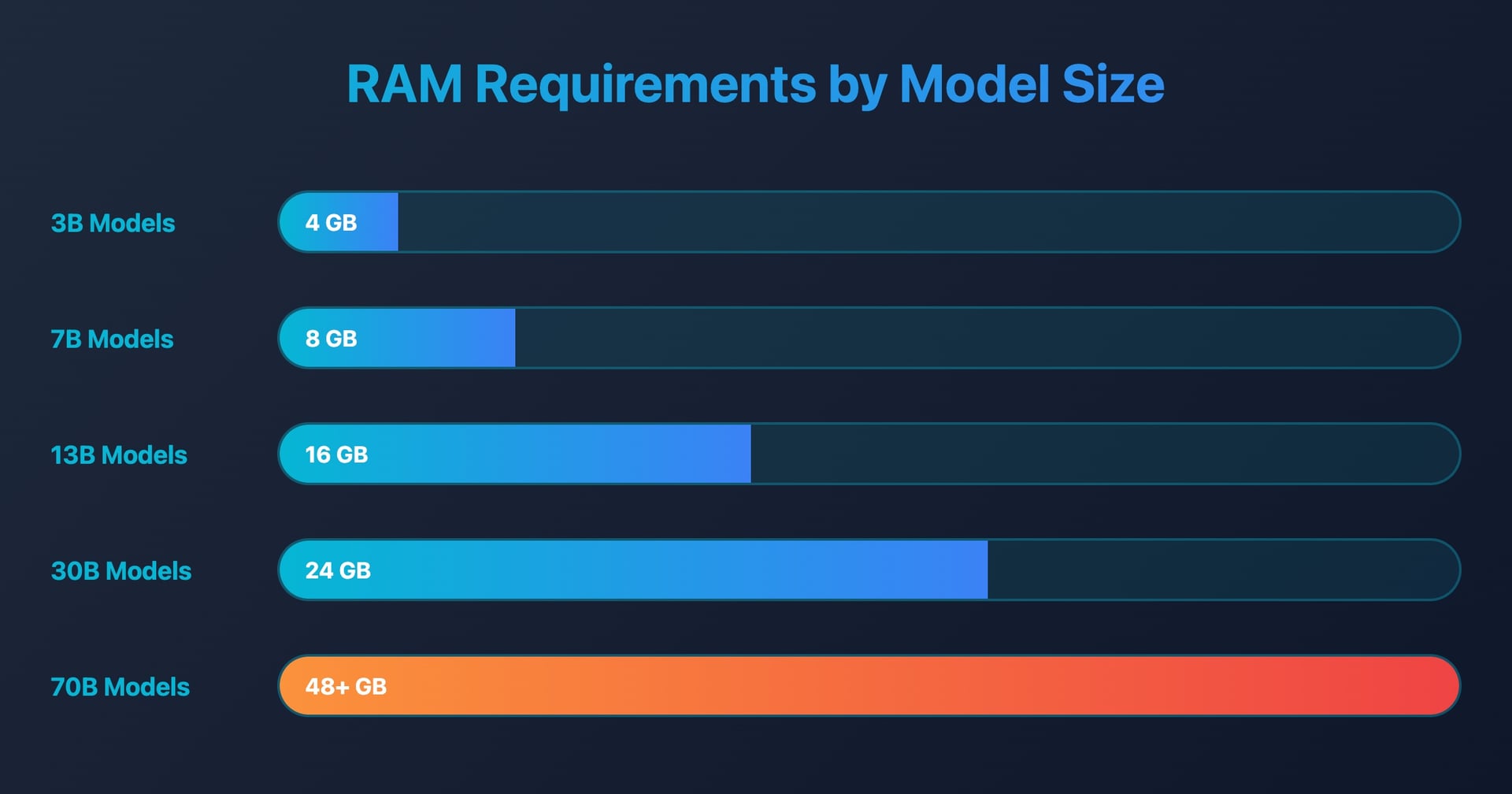

RAM Requirements by Model Size:

| Model Size | Minimum RAM (4-bit quantized) | Example Models | Quality Level | Best For |

|---|---|---|---|---|

| 1-3B params | 4GB RAM | TinyLlama, Phi-2 | Basic | Simple tasks, old PCs |

| 7-8B params | 8GB RAM | Llama 3.1 8B, Mistral 7B | Excellent ✅ | Most users |

| 13-14B params | 16GB RAM | Llama 2 13B, Qwen 14B | Superior | Power users |

| 33-40B params | 32GB RAM | CodeLlama 34B, Yi 34B | Expert | Professionals |

| 70B+ params | 64GB+ RAM | Llama 3.1 70B | GPT-4 Level | Enterprise |

Recommendation: Start with 8GB RAM and run a 4-bit build of Llama 3.1 8B or Mistral 7B for everyday tasks, closing other applications while the model is loaded. Upgrade to 16GB+ if you want headroom, need larger models, or run multiple models simultaneously.

Quick Summary:

- ✅ Complete RAM requirements by model size and use case

- ✅ Cost-benefit analysis for 8GB, 16GB, 32GB, and 64GB+ configurations

- ✅ Memory optimization techniques for any system

- ✅ Upgrade planning and future-proofing strategies

- ✅ Real-world performance comparisons

RAM is the single most important factor determining which AI models you can run locally. Unlike cloud AI services where memory limitations are hidden, local AI puts you in control—but also requires careful planning. This comprehensive guide will help you determine exactly how much RAM you need and when to upgrade.

Table of Contents

- Understanding AI Memory Requirements

- RAM Requirements by Model Size

- 8GB RAM: What's Possible

- 16GB RAM: The Sweet Spot

- 32GB RAM: Professional Territory

- 64GB+ RAM: Enterprise Level

- Memory Optimization Techniques

- Cost-Benefit Analysis

- Upgrade Planning Strategy

- Future-Proofing Considerations

Understanding AI Memory Requirements {#understanding-memory}

How AI Models Use Memory

When you load an AI model, several memory allocations occur simultaneously:

Base Model Storage:

- Model parameters (weights and biases)

- Vocabulary embeddings

- Architecture metadata

Runtime Memory:

- Context buffer (conversation history)

- Inference calculations

- Temporary variables

- GPU memory transfers (if applicable)

System Overhead:

- Operating system requirements

- Background applications

- Memory fragmentation buffer

Memory Calculation Formula

Total RAM Needed = Model Size + Context Memory + System Overhead + Safety Buffer

Where:

- Model Size = Parameters × Bytes per Parameter (set by the precision)

- Context Memory (KV cache) = 2 × Layers × Context Length × KV Width × Bytes per Element, where KV Width = KV heads × head dimension

- System Overhead = OS (2-4GB) + Apps (1-3GB)

- Safety Buffer = 20-30% of total for stability
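The formula above can be turned into a quick estimate. The sketch below is one possible reading of it, not a definitive calculator: the function name `estimate_total_ram_gb` is hypothetical, and it assumes the safety buffer is a share of the final total, so the other three terms are divided by (1 - buffer) rather than multiplied.

```shell
# estimate_total_ram_gb MODEL_GB CONTEXT_GB SYSTEM_GB BUFFER_PERCENT
# Hypothetical helper: sums model, context, and system memory, then
# scales the result so the buffer makes up BUFFER_PERCENT of the total.
estimate_total_ram_gb() {
  awk -v model="$1" -v ctx="$2" -v sys="$3" -v buf_pct="$4" 'BEGIN {
    base = model + ctx + sys
    # The buffer is a fraction of the *total*, so divide by what is left.
    total = base / (1 - buf_pct / 100)
    printf "%.1f\n", total
  }'
}

# 7B model at Q4_K_M (4.1GB), 0.5GB context, 4GB system overhead, 25% buffer
estimate_total_ram_gb 4.1 0.5 4 25   # prints 11.5
```

The result lands close to the 12GB recommendation for 7B models given later in this guide, which suggests the recommended figures already include system overhead and a buffer.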

For detailed technical specifications and memory optimization techniques, the llama.cpp project documentation provides comprehensive information about memory usage patterns and quantization methods used by Ollama and similar tools.

Precision Impact on Memory Usage

| Precision | Bytes per Parameter | Memory Multiplier | Quality Loss |

|---|---|---|---|

| FP32 | 4 bytes | 4.0x | 0% (reference) |

| FP16 | 2 bytes | 2.0x | <1% |

| Q8_0 | ~1.06 bytes | ~1.06x | 2-3% |

| Q4_K_M | ~0.6 bytes | ~0.6x | 5-8% |

| Q4_K_S | ~0.57 bytes | ~0.57x | 8-12% |

| Q2_K | ~0.37 bytes | ~0.37x | 15-25% |
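To see how the bytes-per-parameter column translates into gigabytes, a minimal sketch follows. The helper name `weights_gb` is an assumption for illustration; it covers weights only, with no context memory or runtime overhead, and you pass in whichever bytes-per-parameter value applies to your chosen precision.

```shell
# weights_gb PARAMS_IN_BILLIONS BYTES_PER_PARAM
# One billion parameters at one byte each is about 1 GB (decimal),
# so the product is already in GB. Weights only, no runtime overhead.
weights_gb() {
  awk -v p="$1" -v b="$2" 'BEGIN { printf "%.1f\n", p * b }'
}

weights_gb 7 2     # 7B at FP16  -> 14.0
weights_gb 13 2    # 13B at FP16 -> 26.0
weights_gb 70 2    # 70B at FP16 -> 140.0
```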

Context Length Impact

Context Memory Formula:

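Memory = 2 (keys and values) × Layers × Context Length × KV Width × Bytes per Element

KV Width is the number of KV heads times the head dimension. Models that use grouped-query attention have a KV width much smaller than their hidden size, so their context memory is correspondingly smaller.

Examples (Llama 3.1 8B: 32 layers, 8 KV heads × 128 dimensions, FP16 cache):

- 2K context: ~256MB additional memory

- 4K context: ~512MB additional memory

- 8K context: ~1GB additional memory

- 16K context: ~2GB additional memory

- 32K context: ~4GB additional memory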
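Context memory depends heavily on the model's architecture, so it is worth computing for the specific model you plan to run. The sketch below counts both the key and the value cache for every layer. The function name `kv_cache_mib` is hypothetical, and the architecture numbers in the example (32 layers, 8 KV heads, head dimension 128) are the values published for Llama 3.1 8B; substitute the ones from your model's config.

```shell
# kv_cache_mib CONTEXT_TOKENS LAYERS KV_HEADS HEAD_DIM BYTES_PER_ELEMENT
# Keys and values are both cached, hence the leading factor of 2.
kv_cache_mib() {
  echo $(( 2 * $1 * $2 * $3 * $4 * $5 / 1024 / 1024 ))
}

# Assumed architecture: 32 layers, 8 KV heads, head dim 128, FP16 cache (2 bytes)
kv_cache_mib 2048 32 8 128 2    # prints 256
kv_cache_mib 8192 32 8 128 2    # prints 1024
kv_cache_mib 32768 32 8 128 2   # prints 4096
```

Older 7B models without grouped-query attention use 32 KV heads instead of 8, which makes the same context four times as expensive.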

RAM Requirements by Model Size {#ram-by-model-size}

Detailed Memory Requirements Table

| Model Size | FP16 Full Precision | Q8_0 8-bit | Q4_K_M 4-bit | Q2_K 2-bit | Recommended RAM | Use Case |

|---|---|---|---|---|---|---|

| 1B parameters | 2.4GB | 1.3GB | 0.7GB | 0.4GB | 4GB min | Mobile, edge |

| 3B parameters | 6.4GB | 3.4GB | 2.0GB | 1.2GB | 8GB min | General use |

| 7B parameters | 14GB | 7.4GB | 4.1GB | 2.6GB | 12GB min | Quality use |

| 13B parameters | 26GB | 14GB | 7.8GB | 4.8GB | 20GB min | Professional |

| 30B parameters | 60GB | 32GB | 18GB | 11GB | 40GB min | High-end |

| 70B parameters | 140GB | 74GB | 42GB | 26GB | 64GB min | Enterprise |
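One way to use the table is to pick the highest-precision format that still fits your memory budget. The sketch below does that for a single table row; `pick_quant` is a hypothetical helper, and the sizes you pass in are simply the four size columns from the row you care about.

```shell
# pick_quant BUDGET_GB FP16_GB Q8_GB Q4_GB Q2_GB
# Prints the highest-precision format whose size fits the budget, or "none".
pick_quant() {
  awk -v budget="$1" -v fp16="$2" -v q8="$3" -v q4="$4" -v q2="$5" 'BEGIN {
    if      (fp16 <= budget) print "FP16"
    else if (q8   <= budget) print "Q8_0"
    else if (q4   <= budget) print "Q4_K_M"
    else if (q2   <= budget) print "Q2_K"
    else                     print "none"
  }'
}

# 7B row from the table, with 4.5GB usable for models
pick_quant 4.5 14 7.4 4.1 2.6   # prints Q4_K_M
# Same row with 10GB usable
pick_quant 10 14 7.4 4.1 2.6    # prints Q8_0
```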

Real-World Memory Usage Examples

Popular Models with Actual Memory Consumption:

Phi-3 Mini (3.8B):

├── FP16: 7.6GB RAM needed → Requires 12GB+ system

├── Q8_0: 4.0GB RAM needed → Requires 8GB+ system

├── Q4_K_M: 2.3GB RAM needed → Works on 4GB+ system

└── Q2_K: 1.2GB RAM needed → Works on any system

Llama 2 7B:

├── FP16: 14GB RAM needed → Requires 20GB+ system

├── Q8_0: 7.4GB RAM needed → Requires 12GB+ system

├── Q4_K_M: 4.1GB RAM needed → Requires 8GB+ system

└── Q2_K: 2.6GB RAM needed → Requires 6GB+ system

Mixtral 8x7B (47B):

├── FP16: 94GB RAM needed → Requires 128GB+ system

├── Q8_0: 50GB RAM needed → Requires 64GB+ system

├── Q4_K_M: 28GB RAM needed → Requires 48GB+ system

└── Q2_K: 18GB RAM needed → Requires 32GB+ system

CodeLlama 34B:

├── FP16: 68GB RAM needed → Requires 96GB+ system

├── Q8_0: 36GB RAM needed → Requires 48GB+ system

├── Q4_K_M: 20GB RAM needed → Requires 32GB+ system

└── Q2_K: 13GB RAM needed → Requires 24GB+ system

Memory Usage During Model Loading

Temporary Memory Spike: During model loading, memory usage can temporarily spike to 1.5-2x the final size:

Loading process memory pattern (example for a 4.1GB model): RAM usage climbs from the start of loading to a peak of about 6.2GB, then settles at a stable 4.1GB once the model is loaded and running.

This means you need more available RAM than the final model size to successfully load.
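A simple pre-flight check captures this rule. The sketch below is illustrative only: `can_load` is a hypothetical helper that compares available memory against the model size multiplied by a spike factor, defaulting to 1.5x, the low end of the range mentioned above.

```shell
# can_load AVAILABLE_MB MODEL_MB [SPIKE_PERCENT]
# Succeeds (exit status 0) if available memory covers the model size
# multiplied by the spike factor. 150 means 1.5x, 200 means 2x.
can_load() {
  spike="${3:-150}"
  needed=$(( $2 * spike / 100 ))
  [ "$1" -ge "$needed" ]
}

# 4.1GB model with 5GB available: 1.5x needs 6150MB, so this fails
if can_load 5000 4100; then
  echo "ok to load"
else
  echo "free up memory first"
fi
```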

8GB RAM: What's Possible {#8gb-ram-analysis}

System Breakdown with 8GB Total RAM

8GB RAM Allocation:

├── Operating System: 2.0-3.0GB

├── Background Apps: 1.0-2.0GB

├── Available for AI: 3.0-5.0GB

└── Safety Buffer: 0.5-1.0GB

═══════════════════════════════

Usable for Models: 2.5-4.5GB

Best Models for 8GB Systems

Tier 1: Excellent Performance

# These models run smoothly with excellent quality

ollama pull phi3:mini # 2.3GB (Q4_K_M)

ollama pull llama3.2:3b # 2.0GB (Q4_K_M)

ollama pull gemma:2b # 1.6GB (Q4_K_M)

ollama pull tinyllama # 0.7GB (Q4_K_M)

Tier 2: Good Performance (with optimization)

# These require closing other applications

ollama pull mistral:7b-instruct-q2_k # 2.8GB (Q2_K)

ollama pull codellama:7b-instruct-q2_k # 2.7GB (Q2_K)

ollama pull llama2:7b-chat-q2_K # 2.6GB (Q2_K)

Tier 3: Possible but Challenging

# Only with significant system optimization

ollama pull mistral:7b-instruct-q4_k_m # 4.1GB (Q4_K_M)

ollama pull codellama:7b-instruct-q4_k_m # 4.0GB (Q4_K_M)

8GB Performance Benchmarks

| Model | Memory Used | Speed (tok/s) | Quality Score | Stability |

|---|---|---|---|---|

| Phi-3 Mini | 2.3GB | 48 | 8.5/10 | ★★★★★ |

| Llama 3.2 3B | 2.0GB | 52 | 8.8/10 | ★★★★★ |

| Gemma 2B | 1.6GB | 68 | 7.8/10 | ★★★★★ |

| Mistral 7B (Q2_K) | 2.8GB | 42 | 7.2/10 | ★★★★☆ |

| Mistral 7B (Q4_K_M) | 4.1GB | 28 | 8.6/10 | ★★★☆☆ |

8GB Optimization Strategies

Memory Management:

# Essential optimizations for 8GB systems

# (these must be set in the environment of the Ollama server process)

export OLLAMA_MAX_LOADED_MODELS=1

export OLLAMA_NUM_PARALLEL=1

export OLLAMA_CONTEXT_LENGTH=1024 # Reduce context window

# Close memory-heavy applications (pkill takes a single pattern per call)

pkill 'firefox|chrome' # Browsers use 1-3GB

pkill 'slack|discord' # Communication apps

pkill spotify # Media players

# Monitor memory usage

watch -n 1 'free -h && ollama ps'
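For scripts, it is often easier to read the available-memory figure directly than to parse `free`. The sketch below reads `MemAvailable` from `/proc/meminfo`, which is the kernel value behind the "available" column in `free` on Linux. The function name `mem_available_mb` is an assumption, and the optional file argument exists only so the parser can be tried against a saved copy.

```shell
# mem_available_mb [MEMINFO_FILE]
# Prints MemAvailable in MB. Defaults to /proc/meminfo (Linux only).
mem_available_mb() {
  awk '/^MemAvailable:/ { printf "%d\n", $2 / 1024 }' "${1:-/proc/meminfo}"
}

# Usage on a Linux system:
#   avail=$(mem_available_mb)
#   [ "$avail" -lt 3000 ] && echo "Less than 3GB available"
```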

Swap Configuration:

# Create swap file for emergencies (Linux)

sudo fallocate -l 4G /swapfile

sudo chmod 600 /swapfile

sudo mkswap /swapfile

sudo swapon /swapfile

echo '/swapfile none swap sw 0 0' | sudo tee -a /etc/fstab

# Optimize swappiness

echo 'vm.swappiness=10' | sudo tee -a /etc/sysctl.conf

For comprehensive memory management and system optimization guidance, the Linux kernel virtual memory documentation provides detailed explanations of memory management parameters and optimization strategies.

16GB RAM: The Sweet Spot {#16gb-ram-analysis}

System Breakdown with 16GB Total RAM

16GB RAM Allocation:

├── Operating System: 3.0-4.0GB

├── Background Apps: 2.0-4.0GB

├── Available for AI: 8.0-11.0GB

└── Safety Buffer: 1.0-2.0GB

════════════════════════════════

Usable for Models: 7.0-10.0GB

Optimal Models for 16GB Systems

Full Quality 7B Models:

# These run at full quality with excellent performance

ollama pull llama3.1:8b # 4.8GB (Q4_K_M)

ollama pull mistral:7b-instruct-q8_0 # 7.4GB (Q8_0)

ollama pull codellama:7b-instruct-q8_0 # 7.0GB (Q8_0)

ollama pull vicuna:7b-v1.5-q8_0 # 7.2GB (Q8_0)

Medium-Large Models:

# These provide excellent performance

ollama pull llama2:13b-chat-q4_K_M # 7.8GB (Q4_K_M)

ollama pull wizardlm:13b-q4_k_m # 7.5GB (Q4_K_M)

# Not recommended on 16GB: Mixtral 8x7B at Q2_K needs ~18GB and will not fit

Multiple Model Setup:

# Can run 2-3 models simultaneously

ollama pull llama3.2:3b # 2.0GB (primary)

ollama pull mistral:7b-instruct-q4_k_m # 4.1GB (quality)

ollama pull codellama:7b-instruct-q4_k_m # 4.0GB (coding)

# Total: ~10GB, leaves 6GB for system
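Before loading several models together, it helps to add up their sizes against the memory you actually have for models. The sketch below is a minimal check; `fits_budget` is a hypothetical helper, and it ignores context memory, so leave extra room in practice.

```shell
# fits_budget BUDGET_GB SIZE_GB...
# Prints the combined size of the listed models and whether it fits.
fits_budget() {
  budget="$1"; shift
  printf '%s\n' "$@" | awk -v budget="$budget" '
    { total += $1 }
    END { printf "%.1f GB total: %s\n", total, (total <= budget ? "fits" : "too large") }'
}

# The three models above against a 10GB model budget
fits_budget 10 2.0 4.1 4.0   # prints "10.1 GB total: too large"
# Dropping one 7B model
fits_budget 10 2.0 4.1       # prints "6.1 GB total: fits"
```

With a 10GB budget, all three models together are marginally too large, so on a 16GB system two loaded at a time is the safer plan.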

16GB Performance Analysis

| Configuration | Models | Memory Used | Performance | Use Case |

|---|---|---|---|---|

| Single Large | Llama 3.1 8B (Q4_K_M) | 4.8GB | 18 tok/s | Best quality |

| Single Premium | Mistral 7B (Q8_0) | 7.4GB | 19 tok/s | Premium quality |

| Multi-Model | 3B + 7B models | 8.0GB | Variable | Flexibility |

| Specialized | CodeLlama 13B | 7.8GB | 12 tok/s | Professional coding |

16GB Advantages

- ✅ Run full-quality 7B models without compromise

- ✅ Multiple models loaded simultaneously

- ✅ Longer context windows (4K-8K tokens)

- ✅ Stable performance without memory pressure

- ✅ Room for other applications while AI runs

- ✅ Future model support as they become more efficient

32GB RAM: Professional Territory {#32gb-ram-analysis}

System Breakdown with 32GB Total RAM

32GB RAM Allocation:

├── Operating System: 4.0-5.0GB

├── Background Apps: 3.0-6.0GB

├── Available for AI: 20.0-25.0GB

└── Safety Buffer: 2.0-4.0GB

═══════════════════════════════

Usable for Models: 18.0-23.0GB

Professional Models for 32GB Systems

Large Language Models:

# Top-tier models with excellent quality

ollama pull llama3.1:70b-instruct-q2_K # 26GB (Q2_K, tight fit: close other applications)

ollama pull mixtral:8x7b-instruct-q4_k_m # 28GB (Q4_K_M, tight fit: close other applications)

ollama pull codellama:34b-instruct-q4_k_m # 20GB (Q4_K_M)

ollama pull wizardlm:30b-q4_k_m # 18GB (Q4_K_M)

Multiple High-Quality Models:

# Professional multi-model setup

ollama pull llama3.1:8b-instruct-q8_0 # 8.5GB (premium general)

ollama pull mistral:7b-instruct-q8_0 # 7.4GB (premium efficient)

ollama pull codellama:13b-instruct-q8_0 # 14GB (premium coding)

# Total: ~30GB, can load 2 simultaneously

Specialized Workflows:

# Research/Analysis Setup

ollama pull llama3.1:70b-instruct-q2_K # 26GB (analysis)

ollama pull phi3:mini # 2.3GB (quick queries)

# Development Setup

ollama pull codellama:34b-instruct-q4_k_m # 20GB (main coding)

ollama pull llama3.1:8b-instruct-q4_K_M # 4.8GB (documentation)

ollama pull phi3:mini # 2.3GB (quick help)

32GB Performance Capabilities

Benchmark Results:

| Model | Memory | Speed | Quality | Max Context (model limit) | Use Case |

|---|---|---|---|---|---|

| Mixtral 8x7B | 28GB | 6 tok/s | 9.5/10 | 32K | Top performance |

| CodeLlama 34B | 20GB | 4 tok/s | 9.2/10 | 16K | Professional dev |

| Llama 3.1 70B | 26GB | 8 tok/s | 9.8/10 | 128K | Research grade |

| Multi-setup | 30GB | Variable | 9.0/10 | Variable | Flexibility |

32GB Professional Advantages

- ✅ Enterprise-grade models like 70B parameter models (at Q2_K)

- ✅ Multiple large models running simultaneously

- ✅ Extended context of 32K tokens and beyond, as far as remaining RAM allows

- ✅ No memory optimization needed for models up to 34B

- ✅ Professional workflows with specialized models

- ✅ Future-proof for next 3-5 years

64GB+ RAM: Enterprise Level {#64gb-ram-analysis}

System Breakdown with 64GB+ RAM

64GB RAM Allocation:

├── Operating System: 5.0-6.0GB

├── Background Apps: 4.0-8.0GB

├── Available for AI: 45.0-55.0GB

└── Safety Buffer: 4.0-8.0GB

═══════════════════════════════

Usable for Models: 40.0-50.0GB

128GB RAM Allocation:

├── Operating System: 6.0-8.0GB

├── Background Apps: 6.0-12.0GB

├── Available for AI: 100.0-116.0GB

└── Safety Buffer: 8.0-16.0GB

════════════════════════════════

Usable for Models: 90.0-108.0GB

Enterprise Models for 64GB+ Systems

Flagship Models (64GB):

# Highest quality models available

ollama pull llama3.1:70b-instruct-q4_K_M # 42GB (excellent quality)

ollama pull mixtral:8x22b-q2_k # ~52GB (if available; above the 40-50GB model budget)

ollama pull codellama:34b-instruct-q8_0 # 36GB (premium coding)

# Multiple large models

ollama pull llama3.1:70b-instruct-q2_K # 26GB

ollama pull mixtral:8x7b-instruct-q8_0 # 50GB (not both at once)

Research-Grade Setup (128GB):

# Can run multiple 70B+ models

ollama pull llama3.1:70b-instruct-q8_0 # 74GB

ollama pull mixtral:8x22b-q4_k_m # 90GB (if available)

ollama pull codellama:70b-q4_k_m # 42GB

# Llama 3.1 405B does not fit in 128GB: even at Q2_K its weights are ~150GB,

# so plan for a 192GB+ system if you need it

# Run 2-3 large models simultaneously

Enterprise Performance Metrics

64GB System Capabilities:

| Configuration | Models | Memory | Performance | Use Case |

|---|---|---|---|---|

| Single Flagship | Llama 3.1 70B (Q4_K_M) | 42GB | 12 tok/s | Best quality |

| Dual Large | 70B + 7B models | 50GB | Variable | Specialized tasks |

| Multi-domain | 4-5 specialized models | 60GB | Variable | Enterprise workflow |

128GB+ System Capabilities:

| Configuration | Models | Memory | Performance | Use Case |

|---|---|---|---|---|

| Ultra Flagship | Llama 3.1 405B (Q2_K) | ~150GB (needs a 192GB+ system) | 3 tok/s | Research |

| Multi-Flagship | Multiple 70B models | 120GB | Variable | Multi-domain expert |

| Full Ecosystem | 10+ specialized models | 100GB | Variable | Complete AI infrastructure |

Enterprise Advantages

- ✅ State-of-the-art open models (70B at Q4_K_M on 64GB; 405B needs well over 128GB)

- ✅ Research capabilities matching commercial APIs

- ✅ Multiple expert models for different domains

- ✅ Long context for complex tasks, limited only by remaining RAM

- ✅ No performance compromises

- ✅ Future-proof for 5+ years

- ✅ On-premises AI infrastructure

Memory Optimization Techniques {#memory-optimization}

System-Level Optimizations

Operating System Tuning:

# Linux optimizations

echo 'vm.swappiness=1' | sudo tee -a /etc/sysctl.conf

echo 'vm.vfs_cache_pressure=50' | sudo tee -a /etc/sysctl.conf

echo 'vm.dirty_ratio=3' | sudo tee -a /etc/sysctl.conf

echo 'vm.dirty_background_ratio=2' | sudo tee -a /etc/sysctl.conf

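# Optional: explicit huge pages for large models. Each page reserves 2MB up front,

# so 2048 pages permanently sets aside 4GB. Skip this on systems with 16GB or less.

# echo 'vm.nr_hugepages=2048' | sudo tee -a /etc/sysctl.conf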

# Apply settings

sudo sysctl -p

Memory Monitoring:

# Create memory monitoring script

cat > ~/monitor_memory.sh << 'EOF'

#!/bin/bash

while true; do

clear

echo "=== Memory Status $(date) ==="

free -h

echo

echo "=== AI Models ==="

ollama ps

echo

echo "=== Top Memory Users ==="

ps aux --sort=-%mem | head -10

echo

echo "=== Memory Pressure ==="

cat /proc/pressure/memory 2>/dev/null || echo "Not available"

sleep 5

done

EOF

chmod +x ~/monitor_memory.sh

Application-Level Optimizations

Ollama Configuration:

# Optimize for different RAM sizes

# free reports slightly less than the installed amount (a 16GB system shows ~15GB),

# so compare against thresholds instead of exact values

total_mb=$(free -m | awk 'NR==2{print $2}')

if [ "$total_mb" -lt 12000 ]; then # 8GB system

  export OLLAMA_MAX_LOADED_MODELS=1

  export OLLAMA_NUM_PARALLEL=1

  export OLLAMA_CONTEXT_LENGTH=1024

elif [ "$total_mb" -lt 24000 ]; then # 16GB system

  export OLLAMA_MAX_LOADED_MODELS=2

  export OLLAMA_NUM_PARALLEL=2

  export OLLAMA_CONTEXT_LENGTH=2048

elif [ "$total_mb" -lt 48000 ]; then # 32GB system

  export OLLAMA_MAX_LOADED_MODELS=3

  export OLLAMA_NUM_PARALLEL=2

  export OLLAMA_CONTEXT_LENGTH=4096

else # 64GB+ system

  export OLLAMA_MAX_LOADED_MODELS=5

  export OLLAMA_NUM_PARALLEL=4

  export OLLAMA_CONTEXT_LENGTH=8192

fi

# Batch size is a per-model option (num_batch), not an environment variable;

# smaller values such as 256 reduce memory use on 8GB systems

Model Loading Strategy:

# Smart model loading based on available memory

smart_load() {

local model="$1"

local available=$(free -m | awk 'NR==2{print $7}')

echo "Available memory: ${available}MB"

if [ "$available" -lt 3000 ]; then

echo "Low memory - clearing cache first"

ollama ps | awk 'NR>1{print $1}' | xargs -r -n1 ollama stop # stop each loaded model

sleep 2

sudo sync && echo 3 | sudo tee /proc/sys/vm/drop_caches > /dev/null

fi

echo "Loading model: $model"

ollama run "$model" "Hello" > /dev/null &

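# Monitor loading process (give up after 120 seconds)

local waited=0

while ! ollama ps | grep -q "$model"; do

[ "$waited" -ge 120 ] && { echo "Timed out loading $model"; return 1; }

echo "Loading..."

sleep 1

waited=$((waited + 1))

done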

echo "Model loaded successfully"

ollama ps

}

Emergency Memory Recovery

# Emergency memory cleanup script

emergency_cleanup() {

echo "🚨 Emergency memory cleanup started..."

# Stop all loaded AI models

ollama ps | awk 'NR>1{print $1}' | xargs -r -n1 ollama stop

# Kill memory-heavy processes

pkill -f "chrome|firefox|slack|discord|spotify"

# Clear system caches

sudo sync

echo 3 | sudo tee /proc/sys/vm/drop_caches > /dev/null

# Show results

echo "✅ Cleanup complete"

free -h

}

Cost-Benefit Analysis {#cost-benefit-analysis}

RAM Upgrade Costs (2025 Prices)

Desktop DDR4/DDR5 Pricing:

8GB → 16GB upgrade:

├── DDR4-3200: $30-50

├── DDR5-4800: $40-60

├── Installation: DIY (free) or $20-30

└── Total cost: $30-80

16GB → 32GB upgrade:

├── DDR4-3200: $60-100

├── DDR5-4800: $80-120

├── Installation: DIY (free) or $20-30

└── Total cost: $60-150

32GB → 64GB upgrade:

├── DDR4-3200: $150-250

├── DDR5-4800: $200-300

├── Installation: DIY (free) or $30-50

└── Total cost: $150-350

Laptop RAM Pricing (if upgradeable):

8GB → 16GB upgrade:

├── SO-DIMM DDR4: $40-70

├── SO-DIMM DDR5: $50-80

├── Professional install: $50-100

└── Total cost: $40-180

Many modern laptops have soldered RAM

Check compatibility before purchasing

Performance Gains per Dollar

8GB → 16GB Upgrade ($30-80 investment):

- ✅ 7B models at full quality (Q8_0 vs Q2_K)

- ✅ Multiple models loaded simultaneously

- ✅ Longer context windows (2K → 4K+ tokens)

- ✅ Better system stability during AI tasks

- 📊 ROI: Excellent - dramatic capability increase

16GB → 32GB Upgrade ($60-150 investment):

- ✅ 13B-30B models accessible

- ✅ Professional workflows with specialized models

- ✅ Extended context (8K+ tokens)

- ✅ No memory optimization needed

- 📊 ROI: Good - significant capability increase

32GB → 64GB Upgrade ($150-350 investment):

- ✅ 70B models for enterprise-grade responses

- ✅ Multiple large models simultaneously

- ✅ Research-grade capabilities

- ✅ Future-proofing for 3-5 years

- 📊 ROI: Moderate - specialized use cases

Alternative Investment Comparison

$100 Budget Options:

Option A: 8GB → 16GB RAM upgrade

├── Capability: 3B → 7B models at full quality

├── Performance: 2-3x improvement

├── Use cases: General users, hobbyists

└── Recommendation: ★★★★★

Option B: Entry GPU (used GTX 1660)

├── Capability: Same models, 2-3x speed

├── Performance: Faster inference only

├── Use cases: Speed-focused users

└── Recommendation: ★★★☆☆

Option C: Cloud AI credits

├── Capability: Access to largest models

├── Performance: Fast but limited usage

├── Use cases: Occasional heavy tasks

└── Recommendation: ★★☆☆☆

Conclusion: RAM upgrades provide the best value for most users.

Break-Even Analysis for Different Users

Hobbyist/Student:

- Current: 8GB system, limited to 3B models

- Upgrade: $50 for 16GB → Access to 7B models

- Break-even: Immediate (dramatic capability increase)

- Recommendation: Essential upgrade

Professional Developer:

- Current: 16GB system, good for 7B models

- Upgrade: $100 for 32GB → Access to 30B coding models

- Break-even: 2-3 months vs cloud coding assistants

- Recommendation: Highly recommended

Enterprise/Researcher:

- Current: 32GB system, good for most tasks

- Upgrade: $300 for 64GB → Access to 70B models

- Break-even: 6-12 months vs API costs for equivalent quality

- Recommendation: Case-by-case basis
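The break-even figures above come down to one division: upgrade cost over the monthly spend the upgrade replaces. The sketch below shows the arithmetic; `break_even_months` is a hypothetical helper, and the $40 per month cloud cost is an assumed example, not a quoted price.

```shell
# break_even_months UPGRADE_COST MONTHLY_CLOUD_SPEND
# Prints how many months of avoided cloud spend pay for the upgrade.
break_even_months() {
  awk -v cost="$1" -v monthly="$2" 'BEGIN {
    if (monthly <= 0) { print "never"; exit }
    printf "%.1f\n", cost / monthly
  }'
}

# $100 upgrade against an assumed $40/month of cloud assistant fees
break_even_months 100 40   # prints 2.5
# $300 upgrade against the same assumed spend
break_even_months 300 40   # prints 7.5
```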

Upgrade Planning Strategy {#upgrade-planning}

Upgrade Decision Framework

Step 1: Assess Current Usage

# Run usage assessment script

cat > ~/assess_usage.sh << 'EOF'

#!/bin/bash

echo "=== Current System Assessment ==="

echo "Total RAM: $(free -h | awk 'NR==2{print $2}')"

echo "Available RAM: $(free -h | awk 'NR==2{print $7}')"

echo "Current models:"

ollama list

echo -e "\n=== Usage Patterns ==="

echo "What do you primarily use AI for?"

echo "1. General conversation and Q&A"

echo "2. Programming and code generation"

echo "3. Writing and content creation"

echo "4. Research and analysis"

echo "5. Multiple professional tasks"

echo -e "\n=== Performance Issues ==="

echo "Do you experience:"

echo "- Slow model loading? (need more RAM)"

echo "- Can't run desired models? (need more RAM)"

echo "- Slow inference? (need GPU or more RAM)"

echo "- System instability? (need more RAM)"

EOF

chmod +x ~/assess_usage.sh

~/assess_usage.sh

Step 2: Identify Target Capabilities

Usage-Based Recommendations:

General Users (Chat, Q&A):

├── Current: 8GB → Target: 16GB

├── Models: 3B → 7B full quality

├── Investment: $50-80

└── Timeline: Immediate (high impact)

Developers (Coding assistance):

├── Current: 16GB → Target: 32GB

├── Models: 7B → 13B/30B specialized

├── Investment: $100-150

└── Timeline: 3-6 months

Content Creators (Writing, marketing):

├── Current: 8GB → Target: 16GB

├── Models: 3B → 7B creative models

├── Investment: $50-80

└── Timeline: Immediate

Researchers (Analysis, academic):

├── Current: 16GB → Target: 64GB

├── Models: 7B → 70B research-grade

├── Investment: $200-350

└── Timeline: 6-12 months

Enterprise (Multiple domains):

├── Current: 32GB → Target: 128GB

├── Models: Multiple large models

├── Investment: $500-1000

└── Timeline: Budget-dependent

Upgrade Timing Strategy

Phase 1: Essential Upgrade (Priority 1)

- 8GB → 16GB: Enable full-quality 7B models

- Timeline: ASAP (dramatically improves capabilities)

- Cost: $50-80

- Impact: High

Phase 2: Professional Upgrade (Priority 2)

- 16GB → 32GB: Enable 13B-30B professional models

- Timeline: 6-12 months after Phase 1

- Cost: $100-150

- Impact: Medium-High

Phase 3: Enterprise Upgrade (Priority 3)

- 32GB → 64GB+: Enable 70B+ enterprise models

- Timeline: 12-24 months after Phase 2

- Cost: $200-500+

- Impact: Medium (specialized use cases)

Budget-Conscious Strategies

Gradual Upgrade Path:

# Start with optimization instead of hardware

# Month 1-2: Optimize current system

optimize_current() {

# Close unnecessary apps

# Use quantized models efficiently

# Set up swap space

# Monitor memory usage

: # no-op so the function body is valid shell

}

# Month 3-4: Small upgrade if budget allows

small_upgrade() {

# 8GB → 12GB or 16GB

# Often just adding one stick

# Immediate capability improvement

: # no-op so the function body is valid shell

}

# Month 6-12: Major upgrade when budget permits

major_upgrade() {

# Replace all RAM

# 16GB → 32GB or 32GB → 64GB

# Plan for future needs

: # no-op so the function body is valid shell

}

Used/Refurbished Options:

Cost Savings on Used RAM:

├── Desktop DDR4: 30-50% savings

├── Server RAM: 50-70% savings (if compatible)

├── Laptop SO-DIMM: 20-40% savings

└── Considerations: Test thoroughly, check compatibility

Future-Proofing Considerations {#future-proofing}

AI Model Trends (2025-2030)

Efficiency Improvements:

- Better quantization: Q3_K, improved Q2_K quality

- Architecture advances: MoE (Mixture of Experts) models

- Specialized models: Task-specific smaller models

- Compression techniques: Model pruning and distillation

Expected RAM Requirements Evolution:

2025: Current state

├── 3B models: 2GB RAM

├── 7B models: 4GB RAM

├── 13B models: 8GB RAM

└── 30B models: 18GB RAM

2027: Improved efficiency (+50% performance/RAM)

├── 3B models: Better than current 7B

├── 7B models: Better than current 13B

├── 13B models: Better than current 30B

└── New 70B models: Better than current 405B

2030: Next-generation architectures

├── Dramatically more efficient

├── Multimodal standard (text, image, audio)

├── Specialized accelerators common

└── 8GB systems run today's "enterprise" models

Hardware Evolution Impact

DDR5 Mainstream Adoption (2025-2026):

- Higher bandwidth: Better performance for AI workloads

- Larger capacities: 32GB/64GB modules become affordable

- Better efficiency: Lower power consumption

LPDDR6 and DDR6 (projected, 2027-2030):

- Massive bandwidth: 10x current DDR4 speeds

- Unified memory: CPU/GPU shared memory architectures

- AI acceleration: Built-in AI processing units

Recommended Future-Proofing Strategy

Conservative Approach (Budget-conscious):

Timeline: 2025-2028

Phase 1 (2025):

├── Upgrade to 16GB DDR4

├── Cost: $50-80

├── Capability: Handle current 7B models well

└── Future: Will handle 2027's improved 7B models excellently

Phase 2 (2027):

├── Upgrade to 32GB DDR5 (when affordable)

├── Cost: $100-150 (projected)

├── Capability: Handle 2027's 13B-30B models

└── Future: Well-positioned for 2030 models

Expected longevity: 5-7 years

Aggressive Approach (Future-ready):

Timeline: 2025-2030+

Phase 1 (2025):

├── Upgrade to 64GB DDR5

├── Cost: $300-500

├── Capability: Handle any current model

└── Future: Handle 2030's models without issue

Phase 2 (2028-2030):

├── Consider DDR6 when mature

├── Cost: TBD

├── Capability: Next-generation AI workloads

└── Future: 10+ year longevity

Expected longevity: 8-10 years

Technology Transition Planning

Platform Upgrade Considerations:

Current System Assessment:

DDR3 Systems (Pre-2015):

├── Recommendation: Full system upgrade

├── Reason: Platform limitations beyond just RAM

├── Target: Modern DDR4/DDR5 system

└── Timeline: As soon as budget allows

DDR4 Systems (2015-2022):

├── Recommendation: RAM upgrade first

├── Reason: Platform still capable

├── Target: 32-64GB DDR4

└── Timeline: Platform upgrade in 3-5 years

DDR5 Systems (2022+):

├── Recommendation: RAM upgrade only

├── Reason: Future-ready platform

├── Target: 32-128GB DDR5

└── Timeline: 5-8 years before next platform

Quick Decision Guide

"How much RAM do I need?" - Quick Answers

For Different Use Cases:

Casual User (Chat, simple Q&A):

- Minimum: 8GB (with optimization)

- Recommended: 16GB

- Models: Phi-3 Mini, Llama 3.2 3B

Student/Hobbyist (Learning, experimentation):

- Minimum: 16GB

- Recommended: 32GB

- Models: Llama 3.1 8B, Mistral 7B

Professional Developer:

- Minimum: 32GB

- Recommended: 64GB

- Models: CodeLlama 13B-34B, Llama 3.1 8B

Content Creator (Writing, marketing):

- Minimum: 16GB

- Recommended: 32GB

- Models: Mistral 7B, Llama 3.1 8B

Researcher/Analyst:

- Minimum: 32GB

- Recommended: 64GB+

- Models: Llama 3.1 70B, specialized research models

Enterprise/Multi-user:

- Minimum: 64GB

- Recommended: 128GB+

- Models: Multiple large models; Llama 3.1 405B only with 192GB+ (about 150GB at Q2_K)

Upgrade Priority Matrix

Current RAM → Upgrade Priority → Expected Improvement

4GB → CRITICAL → Basic AI capability

8GB → HIGH → Full-quality 7B models

16GB → MEDIUM → Professional models (13B-30B)

32GB → LOW → Enterprise models (70B+)

64GB+ → OPTIONAL → Research/multi-model setups
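The matrix above can be expressed as a small lookup. In the sketch below, `upgrade_priority` is a hypothetical helper, and the handling of in-between sizes (for example, treating 12GB like 16GB) is an assumption, since the matrix only lists the common configurations.

```shell
# upgrade_priority CURRENT_RAM_GB
# Maps installed RAM to the upgrade priority from the matrix above.
upgrade_priority() {
  if   [ "$1" -le 4 ];  then echo "CRITICAL"
  elif [ "$1" -le 8 ];  then echo "HIGH"
  elif [ "$1" -le 16 ]; then echo "MEDIUM"
  elif [ "$1" -le 32 ]; then echo "LOW"
  else                       echo "OPTIONAL"
  fi
}

upgrade_priority 8    # prints HIGH
upgrade_priority 64   # prints OPTIONAL
```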

Frequently Asked Questions

Q: Is 8GB RAM enough for local AI in 2025?

A: Yes, but with limitations. You can run 3B models excellently and 7B models with heavy quantization (Q2_K). For the best experience, 16GB is recommended.

Q: What's the difference between running a 7B model in Q4_K_M vs Q2_K?

A: Q4_K_M uses ~4GB RAM with roughly 5-8% quality loss, while Q2_K uses ~2.6GB with roughly 15-25% quality loss. The difference is noticeable in complex reasoning and creative tasks.

Q: Should I upgrade RAM or buy a GPU first?

A: Upgrade RAM first if you run models on the CPU, because capacity determines which models you can load at all. A GPU mainly speeds up inference, and the model (or the layers offloaded to it) still has to fit in the GPU's own memory.

Q: Can I run multiple AI models simultaneously?

A: Yes, if you have sufficient RAM. Each model needs its full memory allocation. With 32GB, you can run 2-3 models (7B + 3B + 3B). With 64GB, you can run multiple large models.

Q: How much does RAM speed (MHz) affect AI performance?

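A: RAM speed has a moderate impact (often a 10-20% performance difference between common speed grades), because token generation on the CPU is largely limited by memory bandwidth. Capacity is still more important than speed, since it decides which models load at all. DDR5 provides better performance than DDR4, but DDR4 is still excellent.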
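A common rule of thumb for CPU-only inference is that each generated token requires reading the full set of weights once, so memory bandwidth divided by model size gives a rough upper bound on tokens per second. The sketch below applies that rule; `max_tokens_per_sec` is a hypothetical helper, the bandwidth figures are theoretical peaks, and real throughput will be lower.

```shell
# max_tokens_per_sec BANDWIDTH_GB_S MODEL_GB
# Rough CPU-only ceiling: every token reads all weights from RAM once.
max_tokens_per_sec() {
  awk -v bw="$1" -v size="$2" 'BEGIN { printf "%.1f\n", bw / size }'
}

# Dual-channel DDR4-3200: 3200 MT/s x 8 bytes x 2 channels = 51.2 GB/s
max_tokens_per_sec 51.2 4.1   # prints 12.5
# Dual-channel DDR5-5600: 5600 MT/s x 8 bytes x 2 channels = 89.6 GB/s
max_tokens_per_sec 89.6 4.1   # prints 21.9
```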

Q: Will future AI models require less RAM?

A: Yes, efficiency is improving. Models are becoming more capable per parameter, and quantization is improving. However, demand for larger models is also growing, so more RAM remains beneficial.

Conclusion

RAM is the foundation of your local AI experience. While you can start with 8GB and optimization techniques, upgrading to 16GB provides the most dramatic improvement in capabilities and user experience. The sweet spot for most users is 16-32GB, which handles current and near-future AI models comfortably.

Key Takeaways:

- 8GB: Possible but limited to small models

- 16GB: Excellent for most users and 7B models

- 32GB: Professional territory with 13B-30B models

- 64GB+: Enterprise and research capabilities

Remember that RAM upgrades are usually the most cost-effective way to improve your local AI capabilities. The investment in more memory pays dividends across all AI tasks and future-proofs your system for years to come.

Plan your upgrades strategically, starting with the most impactful improvements first. Most users should prioritize reaching 16GB, then consider 32GB based on their specific needs and use cases.
